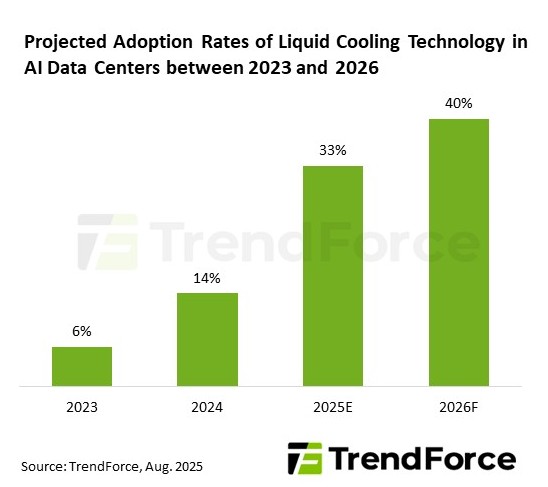

TrendForce’s latest research on the liquid cooling industry reveals that the rollout of NVIDIA’s GB200 NVL72 rack servers in 2025 will accelerate AI data center upgrades, driving liquid cooling adoption from early pilot projects to large-scale deployment. Penetration in AI data centers is projected to surge from 14% in 2024 to 33% in 2025, with continued growth in the following years.

TrendForce notes that power consumption for GPUs and ASIC chips in AI servers has risen sharply. For example, NVIDIA’s GB200/GB300 NVL72 systems have a TDP of 130–140 kW per rack, far exceeding the limits of traditional air-cooling systems. This has prompted the early adoption of liquid-to-air (L2A) cooling technologies.

L2A will serve as the mainstream transitional solution in the short term due to constraints in existing data center infrastructure and water circulation systems. However, as next-generation data centers come online starting in 2025—and as AI chip power consumption and system density continue to rise—liquid-to-liquid (L2L) cooling is expected to gain rapid traction beginning in 2027, offering higher efficiency and stability. Over time, L2L is poised to replace L2A as the dominant cooling solution in AI facilities.

The four leading North American cloud service providers (CSPs) are ramping up investments in AI infrastructure, with new data center expansions underway across North America, Europe, and Asia. They are also building facilities with liquid-cooling-ready designs.

Google and AWS have already launched modular data centers with liquid cooling pipelines in the Netherlands, Germany, and Ireland. Meanwhile, Microsoft is running pilot deployments in the U.S. Midwest and Asia, with plans to make liquid cooling a standard architecture starting in 2025.

TrendForce points out that the rise of liquid cooling is fueling demand for cooling modules, heat exchange systems, and related components. Cold plates, the core component for direct-contact heat exchange, are supplied by Cooler Master, AVC, BOYD, and Auras. Three of these suppliers (all except BOYD) have expanded liquid cooling production capacity in Southeast Asia to meet strong demand from U.S. CSP clients.

Coolant distribution units (CDUs)—the core modules responsible for heat transfer and coolant flow—are generally classified into sidecar and in-row designs. Sidecar CDUs currently dominate the market, led by Delta, while in-row CDUs, supplied primarily by Vertiv and BOYD, offer stronger cooling performance suited for high-density AI rack deployments.

Quick disconnects (QDs) are critical connectors in liquid cooling systems, ensuring airtightness, pressure resistance, and reliability. In NVIDIA’s GB200 project, international leaders such as CPC, Parker Hannifin, Danfoss, and Staubli have gained an early advantage with proven certifications and high-end application expertise.

For more information on reports and market data from TrendForce’s Department of Semiconductor Research, please email the Sales Department at SR_MI@trendforce.com.

For additional insights from TrendForce analysts on the latest tech industry news, trends, and forecasts, please visit https://www.trendforce.com/news/
