About TrendForce News

TrendForce News operates independently from our research team, curating key semiconductor and tech updates to support timely, informed decisions.

[News] Memory Price Surge Reportedly to Push Samsung, SK hynix Gross Margins Above TSMC in 4Q25

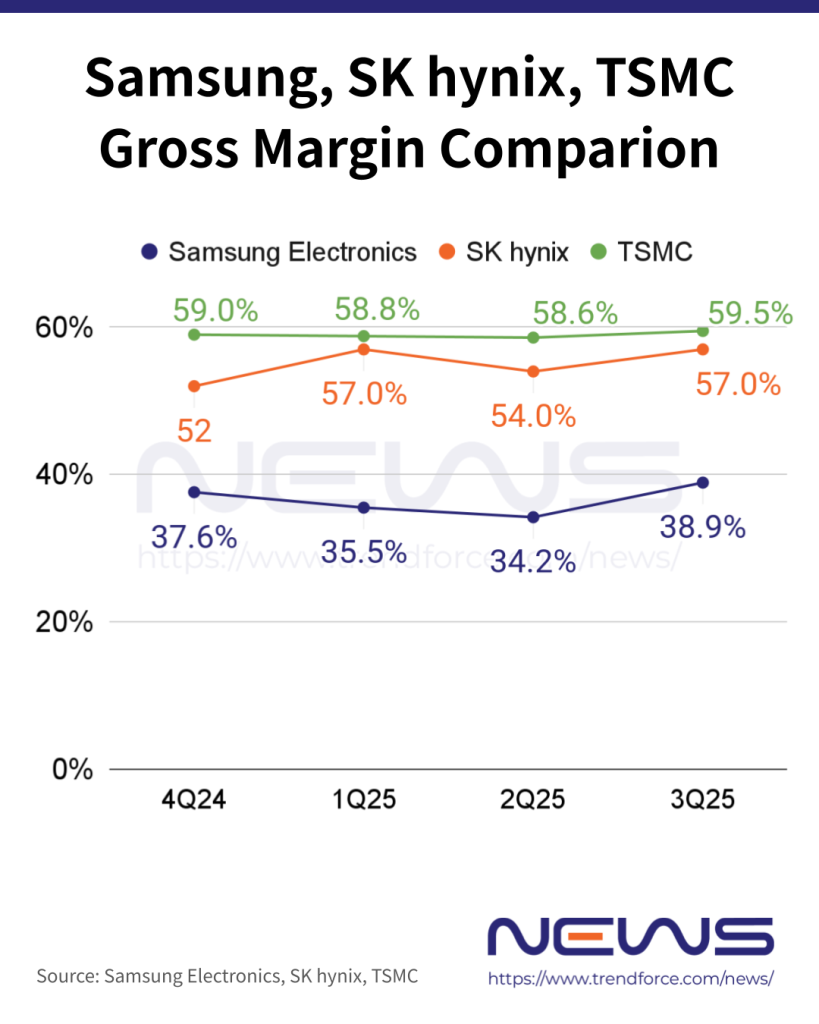

Soaring AI demand is pushing memory prices sharply higher, reshaping profit dynamics across the semiconductor industry. According to Hankyung, Samsung Electronics’ memory division and SK hynix are expected to surpass TSMC in gross margin in the fourth quarter of 2025. This would be the first time in seven years, since the fourth quarter of 2018, that memory makers’ gross margins have overtaken those of foundries.

Sources cited in the report say Samsung Electronics’ memory division and SK hynix are expected to deliver gross margins of roughly 63% to 67% in the fourth quarter, topping TSMC’s official guidance of 60%.

The report also points out that Micron, the world’s third-largest memory maker, announced on the 17th that its gross margin reached 56% in its fiscal 2026 first quarter (September–November) and is expected to climb to 67% in its second quarter (December–February). This suggests that Micron is also on track to surpass TSMC in gross margin by the first quarter of next year, the report notes.
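To make the comparison concrete, the short Python sketch below expresses each reported margin as a gap in percentage points against TSMC's 60% guidance. All figures are the ones cited in the report; the dictionary labels and the helper name `gap_vs_tsmc` are illustrative assumptions, not anything taken from the source.

```python
# Gross margin figures as cited in the report, in percent.
TSMC_GUIDANCE = 60

reported_margins = {
    "Samsung memory / SK hynix, 4Q25 (low end)": 63,
    "Samsung memory / SK hynix, 4Q25 (high end)": 67,
    "Micron, fiscal 1Q26 (reported)": 56,
    "Micron, fiscal 2Q26 (expected)": 67,
}


def gap_vs_tsmc(margin_pct, baseline_pct=TSMC_GUIDANCE):
    """Return the gap in percentage points; positive means above TSMC."""
    return margin_pct - baseline_pct


# Hypothetical helper output: one gap per reported figure.
gaps = {label: gap_vs_tsmc(pct) for label, pct in reported_margins.items()}

for label, gap in gaps.items():
    print(f"{label}: {gap:+d} pp vs. TSMC guidance")
```

On these numbers, Samsung's memory division and SK hynix would sit 3 to 7 percentage points above TSMC, while Micron moves from 4 points below to 7 points above between its two fiscal quarters.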

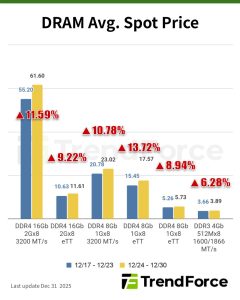

As the report highlights, a sharp increase in prices is the main near-term driver of memory market expansion. The three major memory makers have allocated about 18% to 28% of their total DRAM production capacity to HBM, which stacks 8 to 16 DRAM dies, and this has tightened the supply of general-purpose memory. As a result, prices for standard DRAM have risen by more than 30% quarter on quarter.
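The capacity figures above imply a simple bound on what remains for conventional DRAM. The sketch below is only arithmetic on the reported 18% to 28% range; the function name `non_hbm_share` is an illustrative assumption, and the result describes capacity share, not bit output or revenue.

```python
# Share of total DRAM production capacity allocated to HBM, per the report.
HBM_SHARE_RANGE = (18, 28)  # percent, low and high ends


def non_hbm_share(hbm_share_pct):
    """Return the capacity share (in percent) left for non-HBM DRAM."""
    if not 0 <= hbm_share_pct <= 100:
        raise ValueError("share must be between 0 and 100 percent")
    return 100 - hbm_share_pct


hbm_low, hbm_high = HBM_SHARE_RANGE
# The highest HBM allocation leaves the least for general-purpose DRAM.
remaining_range = (non_hbm_share(hbm_high), non_hbm_share(hbm_low))

print(f"Capacity left for non-HBM DRAM: "
      f"{remaining_range[0]}% to {remaining_range[1]}%")
```

Under these figures, between 72% and 82% of DRAM capacity remains available for general-purpose products.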

From Training to Inference: Memory Takes Center Stage

As memory gross margins are poised to overtake those of foundries, the report notes that the shift is being fueled by growing memory demand as the AI industry transitions from “training” to “inference,” where rapid data storage and retrieval are essential. The report adds that inference applies knowledge gained during training to problem-solving, which in turn requires memory such as HBM to store data and continuously feed it to GPUs.

Demand is also rising rapidly for power-efficient general-purpose memory, even when its performance trails HBM, the report notes. During the early phases of inference, workloads are typically handled by general-purpose DRAM such as GDDR7 and LPDDR5X, while HBM is reserved for more intensive inference tasks. NVIDIA’s use of GDDR7 in inference-focused AI accelerators serves as a representative example, the report adds.

Meanwhile, memory companies plan to sustain the memory-centric era by developing high-performance products tailored for AI, the report notes. One example is processing-in-memory (PIM), which enables memory to handle part of the computational workload traditionally performed by GPUs. The report adds that technologies such as vertical channel transistor (VCT) DRAM and 3D DRAM, which boost data density by storing more information in a smaller area, are also expected to enter the market.

(Photo credit: Samsung)