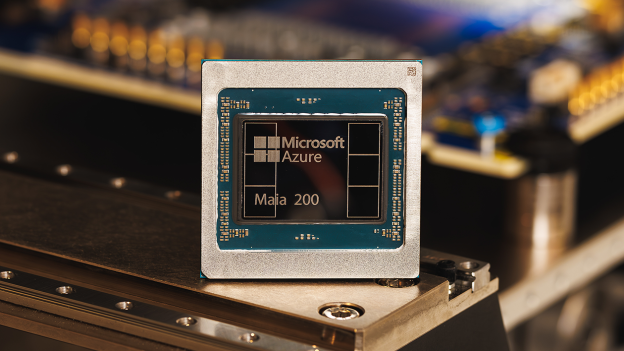

[News] Microsoft Unveils Maia 200 AI Chip on TSMC 3nm; SK hynix Reportedly Sole HBM3E Supplier

Microsoft unveiled its latest in-house AI chip, Maia 200, marking a further push into custom silicon for data-center AI workloads. According to the company’s press release, the chip is fabricated on TSMC’s 3nm process and features native FP8 and FP4 tensor cores designed to enhance performance and efficiency for AI training and inference.

Meanwhile, South Korean outlet Maeil Business Newspaper notes, citing industry sources, that SK hynix is believed to be the sole supplier of high-bandwidth memory (HBM) for the chip. The Maia 200 is said to use 216GB of HBM3E from SK hynix.

Maeil Business Newspaper notes that SK hynix’s potential role as the sole HBM supplier could further intensify competition in the custom AI chip (ASIC) market, sharpening rivalry between South Korea’s two leading memory makers. The report adds that Samsung Electronics is believed to hold a larger share of HBM supply for Google’s TPU lineup.

ASIC Designs Push Toward Larger HBM Capacity

The chip also highlights a broader industry shift toward larger HBM capacities in custom AI accelerator designs. As noted by Maeil Business Newspaper, Microsoft’s previous-generation Maia accelerator was equipped with 64GB of HBM2E. By contrast, according to Microsoft’s press release, Maia 200 significantly expands memory capacity, adopting a redesigned architecture that pairs 216GB of HBM3E, delivering 7 TB/s of bandwidth, with 272MB of on-chip SRAM.

For comparison, the report adds that Google’s TPU v7 Ironwood was unveiled with 192GB of HBM3E, while Amazon Web Services’ latest Trainium3 accelerator features 144GB of HBM, up from 96GB in the prior generation.
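To put the capacity figures above side by side, the short sketch below computes the generation-over-generation growth in HBM capacity. It uses only the numbers quoted in this article; the dictionary layout and the `growth_ratio` helper are illustrative names, not anything published by the vendors, and the article gives no prior-generation figure for Google's TPU, so that entry is left unknown.

```python
# HBM capacities in GB as quoted in the article: (previous generation, current generation).
# None marks a previous-generation figure the article does not provide.
hbm_capacity_gb = {
    "Microsoft Maia 200": (64, 216),
    "AWS Trainium3": (96, 144),
    "Google TPU v7 Ironwood": (None, 192),
}


def growth_ratio(previous_gb, current_gb):
    """Return current/previous capacity, or None when the prior figure is unknown."""
    if previous_gb is None:
        return None
    return current_gb / previous_gb


for chip, (previous_gb, current_gb) in hbm_capacity_gb.items():
    ratio = growth_ratio(previous_gb, current_gb)
    if ratio is None:
        print(f"{chip}: {current_gb} GB (prior generation not stated)")
    else:
        print(f"{chip}: {previous_gb} GB -> {current_gb} GB ({ratio:.3f}x)")
```

On these figures, Maia's HBM capacity grows roughly 3.4x between generations, compared with 1.5x for Trainium.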

Maia 200 Performance and Platform Integration

In terms of performance, Microsoft said Maia 200 delivers three times the FP4 performance of third-generation Amazon Trainium and FP8 performance surpassing Google’s seventh-generation TPU. The company added that Maia 200 is the most efficient inference system it has deployed to date, providing 30% better performance per dollar than the latest generation of hardware currently used across its infrastructure.
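One way to read the performance-per-dollar claim is sketched below. It assumes "30% better performance per dollar" means 1.3x the work per dollar spent relative to the baseline hardware; Microsoft's exact methodology is not described here, and both function names are hypothetical.

```python
def performance_for_same_budget(perf_per_dollar_gain):
    """Relative performance obtained from an unchanged budget."""
    return 1.0 + perf_per_dollar_gain


def cost_for_same_performance(perf_per_dollar_gain):
    """Relative cost of matching the baseline's performance."""
    return 1.0 / (1.0 + perf_per_dollar_gain)


gain = 0.30  # the 30% figure quoted by Microsoft
print(f"Same budget: {performance_for_same_budget(gain):.2f}x the performance")
print(f"Same performance: {cost_for_same_performance(gain):.1%} of the cost")
```

Under that assumption, a 30% gain in performance per dollar translates to about a 23% lower cost for the same workload, not a 30% lower cost.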

Building on those performance gains, Microsoft said Maia 200 is part of its heterogeneous AI infrastructure and will support multiple models, including OpenAI’s latest GPT-5.2. Notably, according to Bloomberg, Microsoft is already designing the chip’s successor, Maia 300. The Bloomberg report adds that the company has other options should its in-house efforts falter, including access to OpenAI’s nascent chip designs under their partnership.

The launch of Maia 200 also comes as custom AI accelerators gain traction across hyperscalers. TrendForce notes that the share of ASIC-based AI servers is expected to reach 27.8% by 2026, the highest since 2023, as North American firms such as Google and Meta expand their in-house ASIC efforts. Shipment growth for ASIC AI servers is also projected to outpace that of GPU-based systems.

(Photo credit: Microsoft)