About TrendForce News

TrendForce News operates independently from our research team, curating key semiconductor and tech updates to support timely, informed decisions.

[News] Samsung, SK Reportedly Hike Server DRAM Prices 60-70% – Google, Microsoft in the Queue

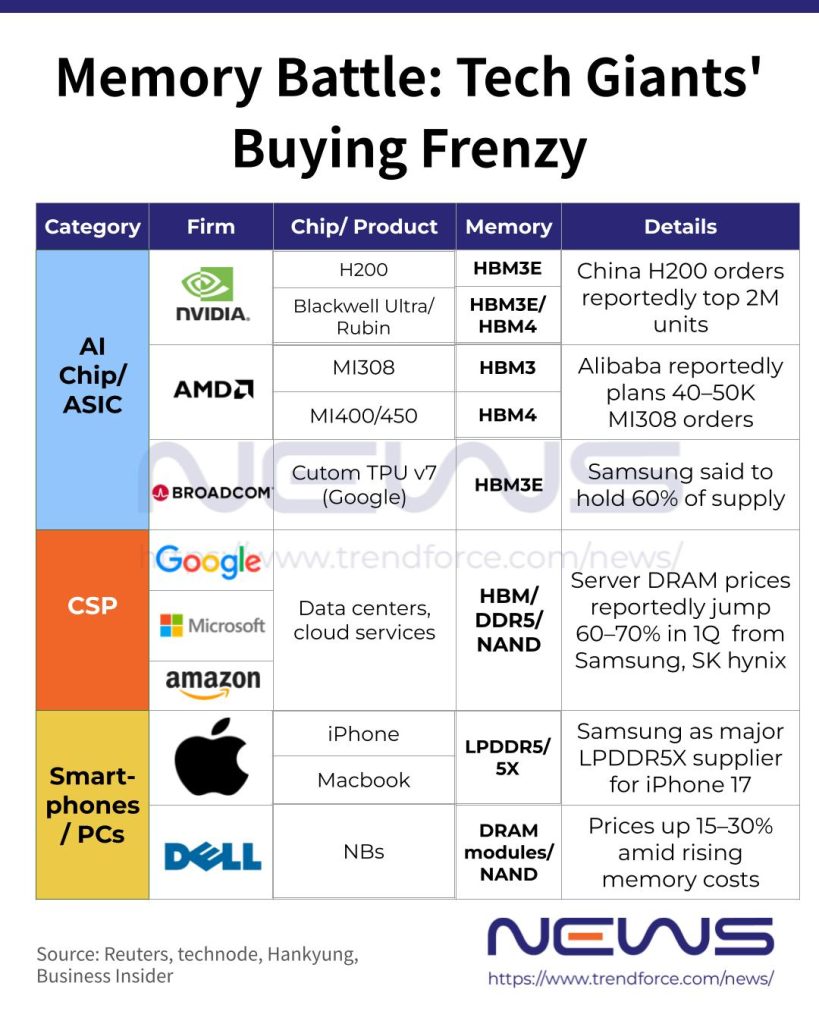

With Jensen Huang officially confirming at CES that all six of NVIDIA's Rubin chips are back from manufacturing partners and set for a 2026 launch, the memory market is entering another fierce sprint for capacity and pricing. According to Hankyung, Samsung Electronics and SK hynix have proposed first-quarter server DRAM prices 60–70% above last year's fourth-quarter levels to major clients including Microsoft and Google, while PC and smartphone DRAM buyers are seeing comparable hikes.

The memory sector has turned into a full-blown seller's market, the report suggests: both South Korean giants are rejecting long-term agreements (LTAs) of two to three years and sticking to quarterly contracts, anticipating stepwise DRAM price increases each quarter through 2027. In December, Micron also said it had already locked in pricing and volume agreements covering its entire calendar 2026 HBM supply, including HBM4.

HBM Orders Surge

Hankyung attributes the spike in memory prices to a surge in HBM3E orders from AI accelerator makers. The U.S. government's approval of NVIDIA H200 exports to China acted as a major trigger: each H200 requires eight HBM3E modules, and Chinese customers have reportedly placed $3 billion in new orders since last month, the report adds.

The report echoes an earlier Reuters story, which said NVIDIA has asked TSMC to boost H200 production. Chinese firms are said to have ordered more than 2 million H200 units for 2026, while the U.S. chip giant currently has only about 700,000 chips in stock, according to Reuters.

Broadcom, which develops custom AI accelerators such as Google's TPU, is also increasing its HBM3E orders, further tightening DRAM supply, Hankyung notes.

Meanwhile, according to TechNode, Alibaba is planning to buy 40,000–50,000 AMD MI308 AI accelerators, which have also received U.S. export approval. Each chip reportedly packs 192GB of HBM3 memory and can run long-context inference for 70-billion-parameter LLMs on a single card. At around $12,000, it is roughly 15% cheaper than NVIDIA's H20, the report noted.

Other Big Tech Firms Join the Queue

Other tech giants, including Microsoft and Amazon, are reportedly scrambling, flying teams to Korea to secure DRAM supply as expanding HBM3E production tightens availability and soaring demand for AI inference triggers shortages, Hankyung reports. Business hotels in Pangyo, near Seoul, have been packed with long-stay bookings from procurement teams sent by cloud giants such as Amazon and Google, as well as device makers including Apple and Dell, the report adds.

TrendForce expects the boom to continue, forecasting that conventional DRAM contract prices will rise 55–60% QoQ in 1Q26, while NAND Flash prices are expected to increase 33–38% QoQ.

TrendForce observes that the DRAM supply-demand gap continues to widen as U.S.-based cloud service providers (CSPs) lock in capacity, forcing other buyers to accept higher prices; server DRAM prices are projected to surge by more than 60% QoQ.


(Photo credit: Samsung)