[News] SOCAMM Ignites a New Memory War After HBM — Samsung and SK hynix Join the Race

With NVIDIA said to be aiming to procure up to 800,000 SOCAMM units this year, a new battleground is emerging among the top three memory giants. U.S.-based Micron appears to be leading the charge, having reportedly started SOCAMM module production for NVIDIA. Meanwhile, Samsung and SK hynix are actively joining the race. Here’s the latest on their progress.

What is SOCAMM?

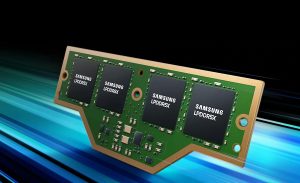

As noted by Hansbiz, SOCAMM (Small Outline Compression Attached Memory Module) is a new type of server memory module that uses low-power DRAM (LPDDR) and targets workloads that existing HBM cannot fully support.

The Korea Herald explains that SOCAMM vertically stacks advanced LPDDR5X chips to enhance data processing performance while significantly reducing energy consumption. For reference, Micron claims that SOCAMM outperforms traditional RDIMMs by delivering more than 2.5 times the bandwidth at one-third the power, in a much smaller 14 x 90 mm form factor.
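As a rough illustration of what Micron's two quoted figures imply when taken together, the sketch below computes the resulting bandwidth-per-watt ratio against an RDIMM baseline. It assumes both figures apply at the same operating point, which the report does not confirm. The function name `bandwidth_per_watt_gain` is hypothetical and used only for this example.

```python
from fractions import Fraction

# Figures quoted in the article (Micron's claims), relative to an RDIMM baseline of 1.
BANDWIDTH_RATIO = Fraction(5, 2)  # "more than 2.5 times the bandwidth" (treated as a lower bound)
POWER_RATIO = Fraction(1, 3)      # "one-third the power"


def bandwidth_per_watt_gain(bandwidth_ratio, power_ratio):
    """Relative bandwidth per watt versus the baseline module (hypothetical helper)."""
    return bandwidth_ratio / power_ratio


gain = bandwidth_per_watt_gain(BANDWIDTH_RATIO, POWER_RATIO)
print(f"Implied bandwidth per watt vs. RDIMM: at least {float(gain):.1f}x")
```

Under that assumption, the two claims combine to at least 7.5 times the bandwidth per watt of an RDIMM. This is derived arithmetic, not a figure stated by Micron or the cited reports.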

Notably, with SOCAMM priced at just 25–33% of the cost of the HBM chips that power AI GPUs, top memory makers are racing to seize an early lead in this emerging server module market, as per the report.

Samsung’s SOCAMM2 under Development; SK hynix Showcases at Intel AI Event

Citing Samsung’s 2025 Sustainability Report, Hansbiz reveals that even before SOCAMM’s first generation hits full-scale production, Samsung is already developing SOCAMM2.

According to Hansbiz, Samsung says switching from DDR-based RDIMM to LPDDR5X cuts power use by about 50%. In addition, by increasing the number of I/O channels, SOCAMM2 delivers up to 90% better performance at the same capacity, which makes it well suited to AI computing, the report adds.

However, Samsung is still working to secure major clients in the field. According to Hansbiz, while NVIDIA recently chose Micron as its first SOCAMM supplier, Samsung is actively negotiating with key AI customers for sample testing.

Meanwhile, SK hynix is stepping up its push to commercialize and promote SOCAMM as well, actively showcasing the technology on the global stage.

According to Hansbiz, SK hynix is deepening partnerships with key players like Intel to lead the AI and custom memory markets, with SOCAMM at the forefront. On July 2, at the 2025 Intel AI Summit Seoul, the company showcased its AI memory lineup, including a 12-layer, 36GB HBM3E (the largest current capacity), a 12-layer HBM4 with a data rate of over 2 terabytes per second, and its SOCAMM modules.

Challenges Ahead

However, SOCAMM still faces hurdles before it can ramp up. A previous ZDNet report suggested that NVIDIA has postponed the launch of its SOCAMM technology, originally planned alongside the GB300, prompting the supply chain to adjust its schedule. According to ZDNet, SOCAMM is now expected to debut with NVIDIA’s Rubin, scheduled for 2026.

According to ZDNet, the GB300 was initially designed around a new board called Cordelia, which combined SOCAMM memory with two Grace CPUs and four Blackwell GPUs. However, Cordelia reportedly faced reliability issues, including data loss, while SOCAMM itself reportedly struggles with thermal and stability challenges.
