AI Surge Set to Double Data Center Power Consumption

Data centers, the backbone of Generative AI, HPC (High-Performance Computing), and cloud services, are experiencing a sharp surge in AI server compute demand. This is driving rapid growth in per-rack power consumption and is set to double overall data center power requirements.

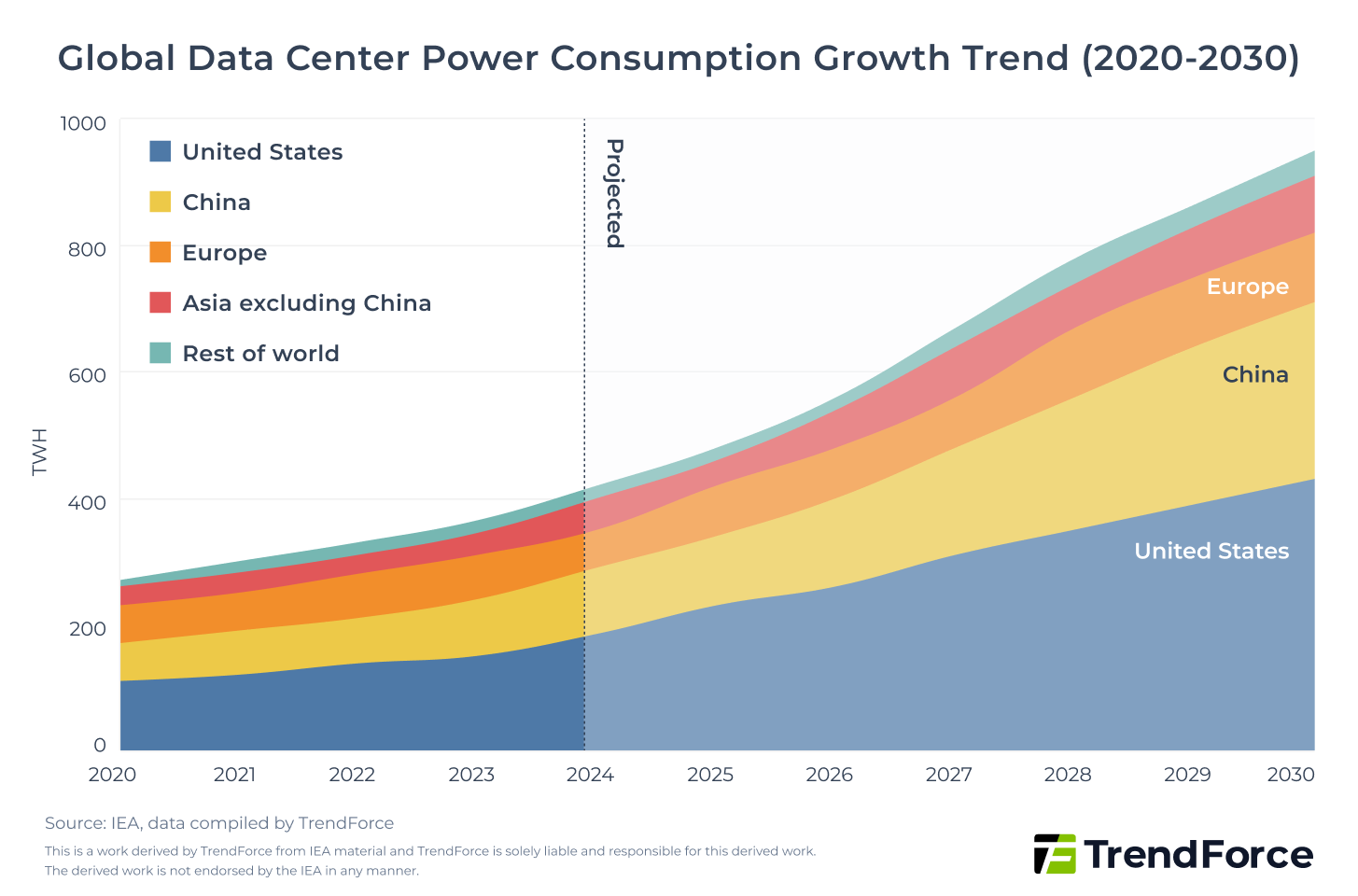

The International Energy Agency (IEA) estimates global data center electricity use at 415 TWh in 2024 and projects it will rise to about 945 TWh by 2030, more than doubling within six years. The U.S. Department of Energy also estimates that data centers’ share of total U.S. electricity consumption will increase from 4.4% in 2023 to 6.7–12% by 2028. Major economies with concentrated AI workloads, including the U.S., Europe, and China, will continue to push the global electricity demand curve steeply upward.
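
The IEA figures imply a compound annual growth rate that can be checked with a few lines of arithmetic. The sketch below uses only the 415 TWh (2024) and 945 TWh (2030) figures quoted above; the helper name `cagr` is our own and is not taken from any IEA tool.

```python
import math

def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1

# IEA figures quoted in the text (TWh); the helper above is illustrative only
start_twh, end_twh, years = 415.0, 945.0, 2030 - 2024

growth = cagr(start_twh, end_twh, years)
# Years needed to double at a constant growth rate
doubling_years = math.log(2) / math.log(1 + growth)

print(f"Implied CAGR: {growth:.1%}")                 # roughly 14.7% per year
print(f"Doubling time: {doubling_years:.1f} years")  # roughly 5 years
```

At roughly 14.7% per year, consumption doubles in about five years, consistent with the "more than doubling within six years" reading of the projection.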


Data Center Power Load and Operational Challenges

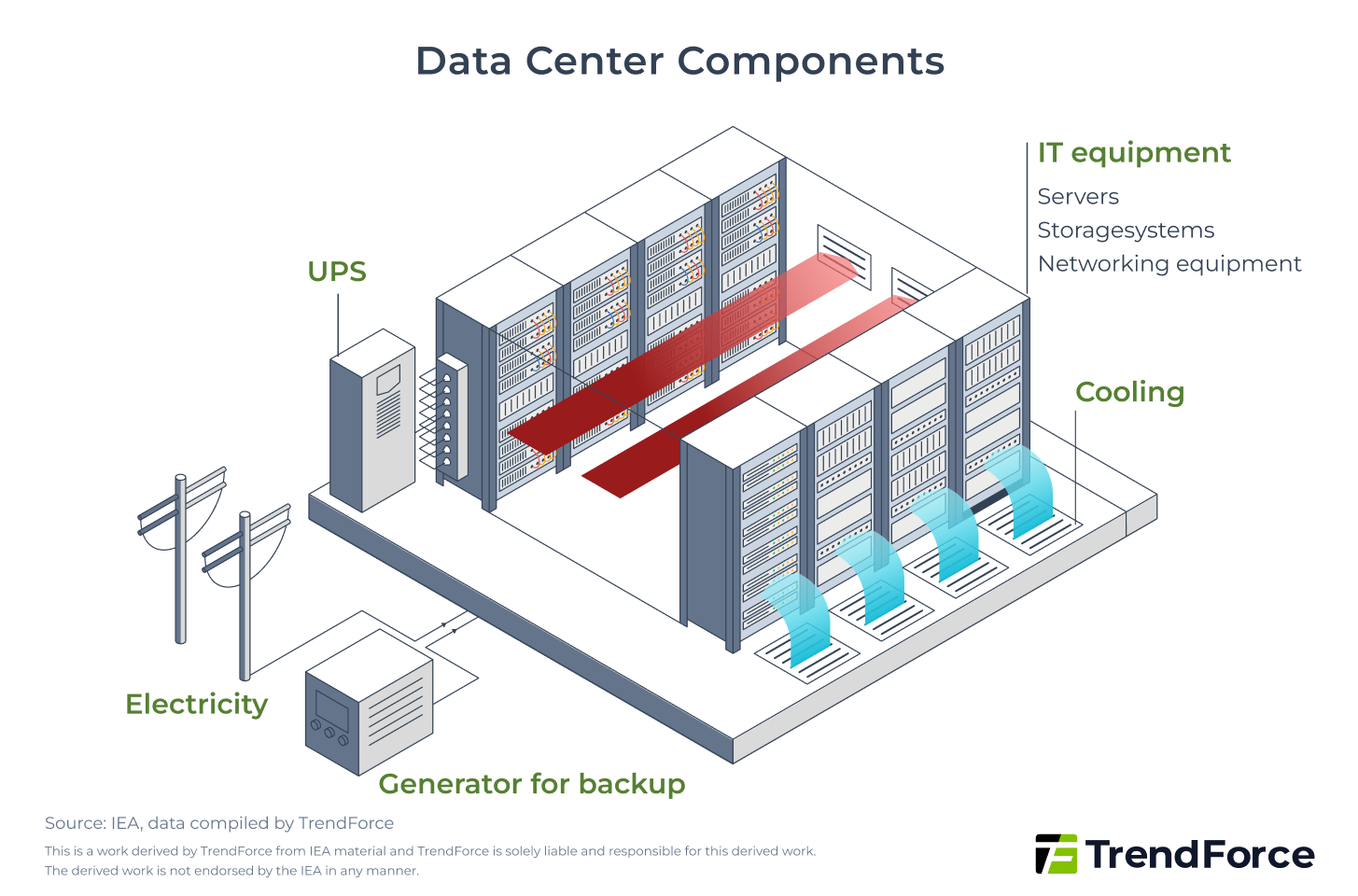

Current data center power consumption is driven primarily by IT equipment (servers, storage systems, and network devices), along with supporting infrastructure such as cooling, UPS (Uninterruptible Power Supply), power conversion, and lighting.

As AI model workloads become more compute-intensive, AI servers are driving steep increases in power demand. The main cause is high-power accelerators such as GPUs, TPUs, ASICs, and FPGAs, which are pushing rack power from the traditional 5–10 kW range to 30–100 kW or more, straining power and cooling systems at the same time.

For example, each GPU in NVIDIA’s Rubin Ultra NVL576 system, expected in late 2027, is projected to have a TDP (Thermal Design Power) of around 3.6 kW. A fully configured rack could reach 600 kW, compared with just 10–30 kW in the past, a leap of more than an order of magnitude.

With power demand surging, data center electrical systems often struggle to handle the high variability of AI workloads. UPS backup and distribution architectures frequently lack sufficient capacity or responsiveness, limiting overall energy efficiency and driving up power costs. As hyperscalers continue investing in next-generation infrastructure, the rising power draw of new AI accelerators will place even greater stress on traditional power delivery architectures.

Table: Data Center Power Demand Allocation and Risk Analysis

| IT Equipment & Infrastructure | Power Share (approx.) | Key Risks & Bottlenecks |
|---|---|---|
| Servers | 60% | AI racks exceed legacy distribution limits, causing overload and outage risks |
| Storage Systems | 5% | High-frequency access increases CPU idle power and extends processing time |
| Network Hardware | 5% | Limited specs cause latency, forcing servers into sustained high load |
| Cooling Systems | 20% | Legacy air cooling cannot handle high-density heat, reducing efficiency |
| Other Infrastructure | 10% | UPS/BBU upgrades lagging, lowering conversion efficiency and weakening peak load protection |

(Source: IEA)

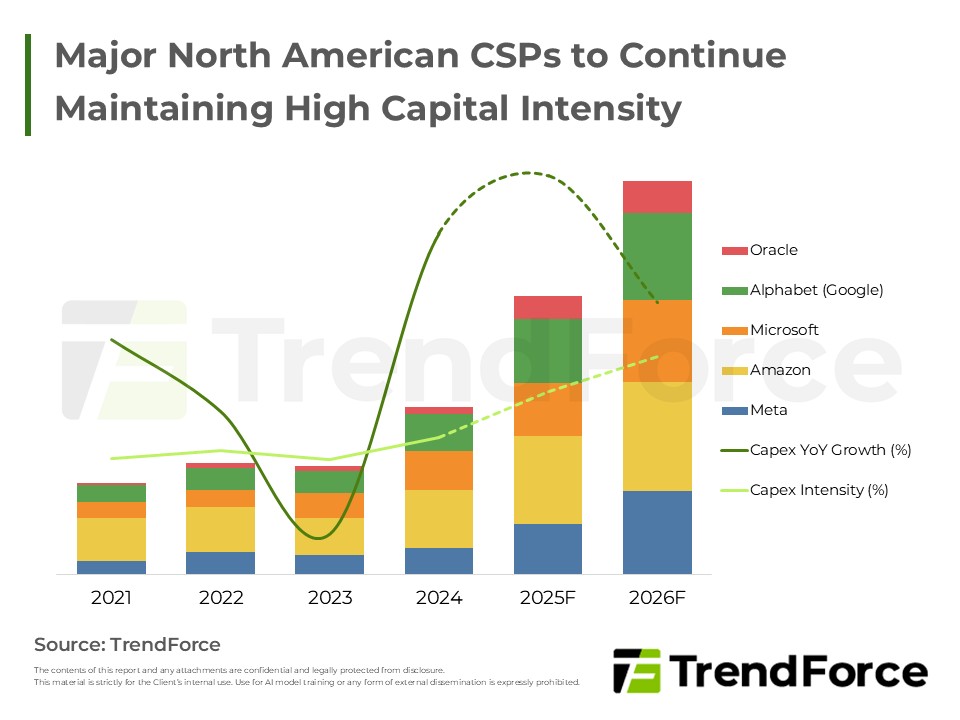

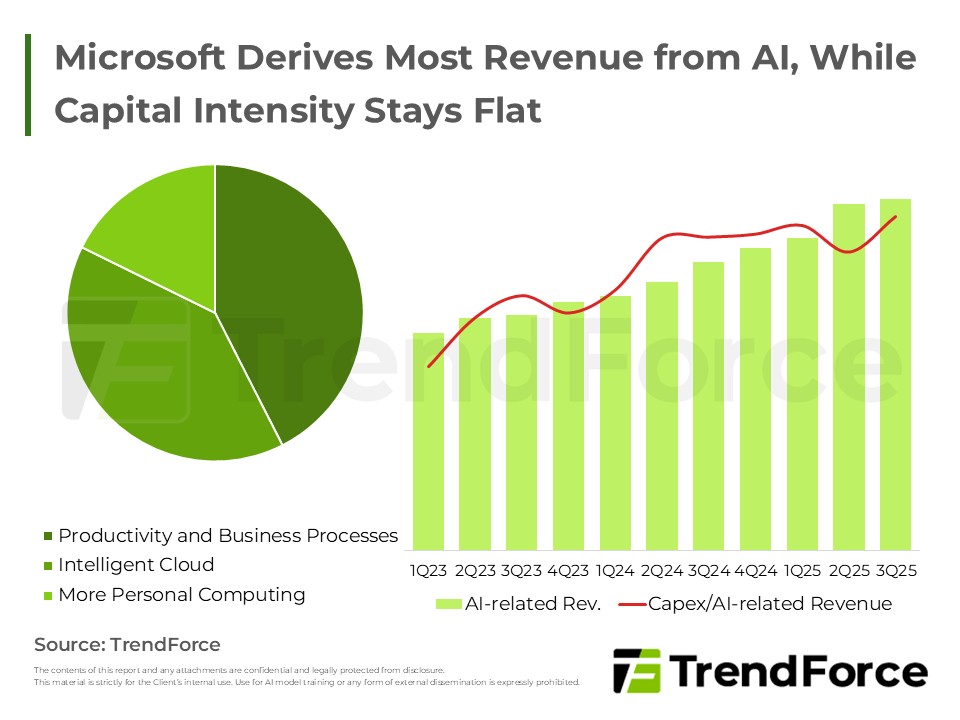


Key Strategies to Reduce Data Center Power Consumption

PUE (Power Usage Effectiveness) is the global standard metric for measuring data center energy efficiency. A PUE approaching the ideal of 1.0 means nearly all facility power goes to IT equipment rather than to cooling, power conversion, or backup systems. The current industry average is around 1.58.

$$ \mathrm{PUE} = \frac{\text{Total Facility Energy}}{\text{IT Equipment Energy}} $$
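
Because PUE is a simple ratio, it can be computed directly. The sketch below applies the formula to the approximate IEA shares in the power-allocation table, treating servers, storage, and network hardware as IT equipment; that grouping is our assumption, so the result is only indicative.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh

# Approximate power shares from the IEA table (percent of facility total).
# Assumption: servers, storage, and network count as IT equipment.
shares = {
    "servers": 60, "storage": 5, "network": 5,  # IT equipment
    "cooling": 20, "other": 10,                 # supporting infrastructure
}
it_share = shares["servers"] + shares["storage"] + shares["network"]
total_share = sum(shares.values())

implied_pue = pue(total_share, it_share)
print(f"Implied PUE: {implied_pue:.2f}")  # 100 / 70, about 1.43
```

Since shares are relative, percentages can stand in for kWh: only the ratio matters.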

In 2024, major CSPs (Cloud Service Providers) maintained PUE levels of around 1.1 and continue to optimize their facilities for high AI-driven workloads:

- Google reports a PUE of 1.09.

- Microsoft reports a PUE of 1.12 in its "Environmental Sustainability Report".

- Amazon AWS reports a PUE of 1.15.
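
To see what these PUE gaps mean in energy terms, the sketch below converts each reported PUE into annual non-IT overhead (cooling, conversion losses, and so on) for a fixed IT load. The 100 MW IT load is a hypothetical assumption, and the calculation assumes the load runs flat all year.

```python
# Non-IT overhead energy implied by a given PUE for a fixed IT load.
IT_LOAD_MW = 100.0   # hypothetical IT load (assumption, not from the source)
HOURS_PER_YEAR = 8760

reported_pue = {
    "Industry average": 1.58,
    "Google": 1.09,
    "Microsoft": 1.12,
    "AWS": 1.15,
}

def overhead_mwh(pue_value: float, it_load_mw: float = IT_LOAD_MW) -> float:
    """Annual energy spent on everything except IT equipment, in MWh."""
    return (pue_value - 1.0) * it_load_mw * HOURS_PER_YEAR

baseline = overhead_mwh(reported_pue["Industry average"])
for name, value in reported_pue.items():
    extra = overhead_mwh(value)
    print(f"{name:16s} PUE {value:.2f}: {extra:>9,.0f} MWh/yr overhead "
          f"({baseline - extra:>9,.0f} MWh/yr less than average)")
```

Overhead scales with PUE − 1 rather than with PUE itself, which is why moving from 1.58 to about 1.1 cuts non-IT energy by more than 80% in this example.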

Against these benchmarks, traditional data centers face structural challenges. With AI workloads growing continuously, achieving cloud-scale PUE requires more than equipment replacement; it demands systematic optimization across power infrastructure and cooling systems. Below are three key strategies to improve energy efficiency and reduce power consumption.

1. High-Efficiency Hardware and Infrastructure to Reduce Power Consumption

Traditional data centers are designed for general-purpose computing and are not optimized for the high power density and workload characteristics of AI. This limits energy efficiency and increases cooling pressure. To support Generative AI and HPC, enterprises should adopt next-generation high-efficiency hardware and innovative infrastructure to reduce power consumption at the source while improving compute performance and overall energy efficiency.

- AI Servers with Low-Power Processors and High-Bandwidth Memory:

Traditional AI servers face high power density, with individual GPUs consuming 400–700 W.

Intel, AMD, and NVIDIA have developed high-efficiency CPUs, GPUs, and AI accelerators using advanced process nodes and architectural optimizations, paired with fast memory technologies such as HBM, DDR5, and MRDIMM.

AMD’s Instinct MI350 GPU series, launched in June 2025, offers four times the AI compute capacity and up to 35 times higher inference performance than the previous generation. Gains of this size can significantly reduce energy per unit of computation, provided power draw rises more slowly than throughput.

- High-Power Racks (HPR):

Traditional 19-inch racks provide only 5–10 kW per cabinet, which is insufficient for modern AI and HPC workloads.

Major cloud providers (Meta, Google, Microsoft) deploy ORv3-HPR V3 racks from the Open Compute Project (OCP), raising power capacity to 300 kW per cabinet to support AI model training, HPC, and high-density inference workloads.

At the 2025 OCP EMEA Summit, Meta previewed ORv3-HPR V4 racks, which introduce a ±400 V (800 V pole-to-pole) HVDC solution and push cabinet load capacity to 800 kW to meet future large-scale AI compute needs.

- Liquid and Immersion Cooling:

Traditional air cooling is inefficient for high-density AI servers, consuming 20–25% of a data center’s total power. Leading cloud providers now use liquid cooling as standard.

Microsoft’s April 2025 Nature publication reports that cold plates and two-phase immersion cooling can reduce greenhouse gas emissions by 15–21%, energy demand by up to 20%, and water usage by up to 52% compared with air cooling. Supermicro states that its liquid cooling solutions can cut data center power costs by up to 40%, improving PUE.
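
Whether a faster accelerator actually saves energy depends on how power draw grows relative to throughput, since energy per operation equals power divided by throughput. The sketch below reuses the 4x compute gain cited for the MI350 series; the 1.5x power increase is a hypothetical assumption, not an AMD specification.

```python
def relative_energy_per_op(power_ratio: float, throughput_ratio: float) -> float:
    """Energy per operation of a new accelerator relative to the old one.

    Energy per op = power / throughput, so the ratio between two generations
    is (P_new / P_old) / (T_new / T_old).
    """
    return power_ratio / throughput_ratio

# Throughput ratio of 4.0 reflects the compute gain cited in the text;
# the 1.5x power ratio is an assumed figure for illustration only.
ratio = relative_energy_per_op(power_ratio=1.5, throughput_ratio=4.0)
print(f"Energy per operation: {ratio:.1%} of the previous generation")  # 37.5%
```

If power rose as fast as throughput (both 4x), the ratio would be 1.0 and per-operation energy would not improve at all.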

2H25 & 2026 Global AI Server Market & Supply Chain Outlook

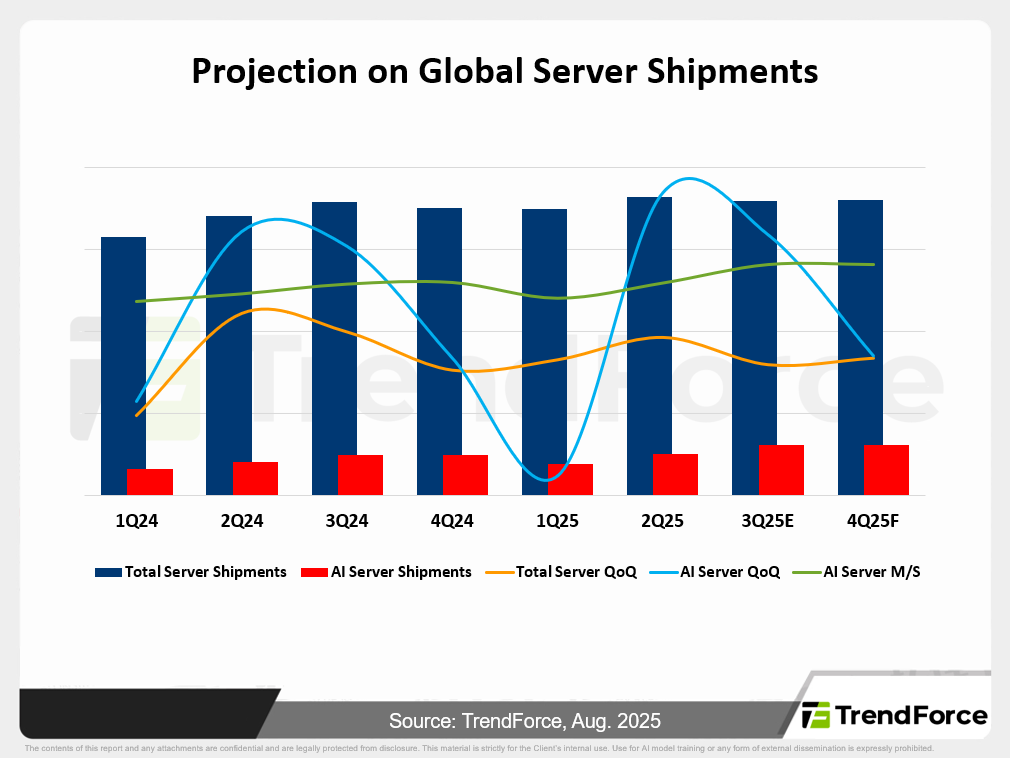

Generative AI is driving explosive demand for AI servers, led by cloud providers. Shipments are set to outpace general-purpose servers, making AI servers the new market focal point. Get Trend Intelligence

2. Power Architecture Innovation to Reduce Transmission and Conversion Losses

Vertiv projects that between 2024 and 2029, AI rack-level power density will surge from 50 kW to 1 MW. Traditional data centers predominantly rely on AC power distribution with centralized UPS systems, which will struggle to meet future power demands. Challenges include insufficient capacity, high energy losses from multiple AC-to-DC and DC-to-AC conversion stages, and compliance with emerging CSP power standards.

HVDC (High-Voltage Direct Current) is a power distribution architecture that delivers high-voltage DC directly to racks, eliminating multiple conversion steps. Delta Electronics estimates that while traditional UPS efficiency is around 88%, a full HVDC chain can achieve approximately 92%. Although the gain is only about four percentage points, large operators could save roughly $3.6 million annually in electricity costs.
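
A few percentage points of efficiency translate into large absolute savings because every watt delivered to IT must be divided by the chain efficiency. The sketch below uses Delta’s 88% and 92% figures; the 100 MW IT load and $80/MWh electricity price are hypothetical assumptions, since the source does not state the facility size behind its savings figure.

```python
from __future__ import annotations

# Annual energy and cost saved by raising end-to-end power-chain efficiency.
HOURS_PER_YEAR = 8760

def annual_savings(it_load_mw: float, eff_old: float, eff_new: float,
                   price_per_mwh: float) -> tuple[float, float]:
    """Return (MWh saved per year, cost saved per year) for a constant IT load."""
    input_old = it_load_mw / eff_old  # utility power drawn with the old chain
    input_new = it_load_mw / eff_new  # utility power drawn with the new chain
    mwh_saved = (input_old - input_new) * HOURS_PER_YEAR
    return mwh_saved, mwh_saved * price_per_mwh

# 88% and 92% come from the text; 100 MW and $80/MWh are assumptions.
mwh, dollars = annual_savings(100.0, 0.88, 0.92, 80.0)
print(f"{mwh:,.0f} MWh/yr saved, about ${dollars / 1e6:.2f} million/yr")
```

Input energy falls by 1 − 0.88/0.92, or about 4.3%. Under these assumptions the result is of the same order as the $3.6 million cited above.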

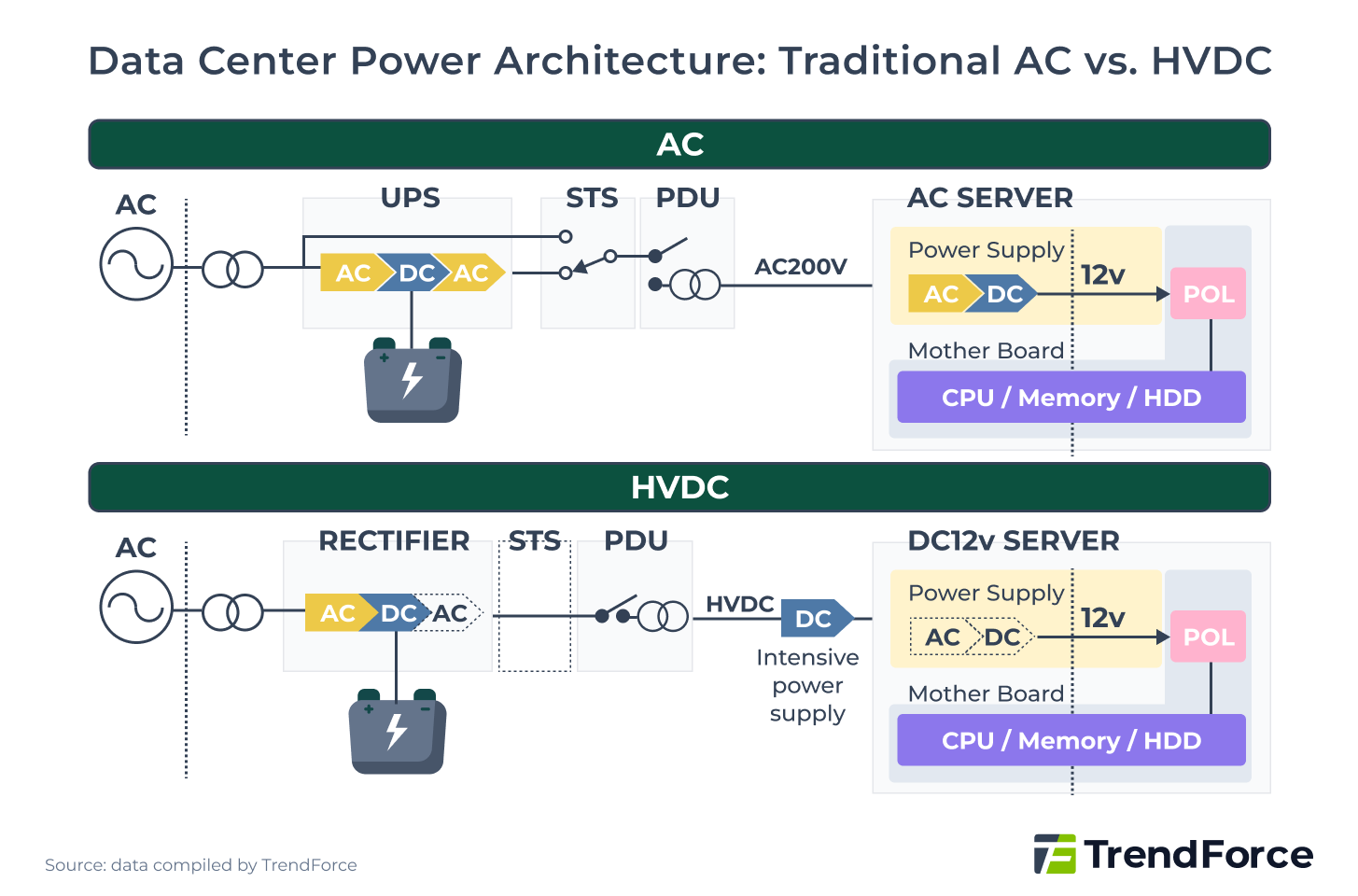

The figure above illustrates the difference between traditional AC and HVDC data center power architectures:

- Traditional AC Power Architecture: AC input → UPS (AC→DC→AC conversion) → STS (static transfer switch) → PDU (distribution panel) → server internal power supply (200 V AC → 12 V DC)

- HVDC Power Architecture: AC input → rectifier (AC→DC) → PDU (distribution panel) → HVDC fed directly to servers, which need only DC-to-DC conversion to 12 V
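
Losses compound because each stage passes only a fraction of its input to the next, so end-to-end efficiency is the product of the stage efficiencies. The sketch below models both chains listed above. The per-stage values are assumptions chosen only so the totals land near Delta’s 88% and 92% figures; they are not vendor specifications.

```python
from __future__ import annotations

import math

# Assumed per-stage efficiencies (illustrative, not vendor specifications).
ac_chain = {
    "UPS (AC→DC→AC)": 0.95,
    "STS": 0.995,
    "PDU": 0.99,
    "Server PSU (AC→12 V DC)": 0.94,
}
hvdc_chain = {
    "Rectifier (AC→DC)": 0.96,
    "PDU": 0.99,
    "Server DC-DC (HVDC→12 V)": 0.97,
}

def chain_efficiency(stages: dict[str, float]) -> float:
    """End-to-end efficiency of stages in series = product of stage efficiencies."""
    return math.prod(stages.values())

ac_eff = chain_efficiency(ac_chain)
hvdc_eff = chain_efficiency(hvdc_chain)
print(f"AC chain:   {ac_eff:.1%}")    # about 88%
print(f"HVDC chain: {hvdc_eff:.1%}")  # about 92%
```

Removing a stage raises the product even when each remaining stage is no better, which is the core argument for fewer conversion steps.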

The core advantage of HVDC lies in reducing conversion stages, eliminating large UPS units and STS switches, and simplifying the overall architecture. Although CSP leaders and NVIDIA follow different technical paths, they share the same goal: moving HVDC from proof-of-concept to a standard for large-scale data center power delivery.

Transitional Solution: Modular UPS Systems

Before fully adopting HVDC, most data centers still rely on standard AC + UPS setups. Modular UPS systems provide a practical interim solution, leveraging existing AC infrastructure while offering high adaptability and lower implementation costs, benefiting engineers already familiar with traditional architectures.

Modular designs allow capacity to scale dynamically with load, addressing the rigidity of conventional monolithic UPS systems. According to a 2023 Rehlko post, modular UPS units operating in standard online mode can achieve up to 96% energy efficiency. Schneider Electric reports that modular UPS configurations can reduce rack footprint by approximately 50%, optimizing space utilization. Furthermore, the system keeps operating even if individual modules fail, significantly reducing downtime risk.
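
The two properties described above, scaling with load and tolerating a module failure, are commonly captured by N+1 sizing. The sketch below assumes a hypothetical 420 kW load and 50 kW modules; neither figure comes from Rehlko or Schneider Electric, and the helper names are our own.

```python
import math

def modules_needed(load_kw: float, module_kw: float, redundant: int = 1) -> int:
    """Modules for N+redundant sizing: N covers the load, extras cover failures."""
    n = math.ceil(load_kw / module_kw)
    return n + redundant

def survives_failures(load_kw: float, module_kw: float, installed: int,
                      failed: int) -> bool:
    """True if the remaining modules can still carry the full load."""
    return (installed - failed) * module_kw >= load_kw

# Hypothetical example: 420 kW load, 50 kW modules, N+1 redundancy
installed = modules_needed(420, 50)
print(installed)                                 # 10
print(survives_failures(420, 50, installed, 1))  # True
print(survives_failures(420, 50, installed, 2))  # False
```

If the load grows to 520 kW, `modules_needed(520, 50)` returns 12, so capacity is added one module at a time instead of replacing a monolithic unit.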

Power Architecture Evolution

Traditional data center power flows through transformers, UPS units, and distribution panels, creating a long conversion path with cumulative losses.

SSTs (Solid-State Transformers) are emerging as a next-generation solution. Instead of routing utility power through multiple conversion stages, SST modules convert incoming AC directly to HVDC, eliminating 2–3 conversion steps and reducing energy losses. This streamlines the power delivery system while maintaining high reliability.

Delta Electronics has outlined a development roadmap combining SSTs with HVDC architectures, projecting overall energy efficiency around 92%. SSTs are poised to become a key technology in the evolution of data center power infrastructure.

3. Distributed Backup Systems to Minimize Downtime Risk

Most traditional data centers rely on centralized UPS systems to back up an entire facility. If the UPS fails or experiences a transfer delay, all servers in the affected zone go offline simultaneously. Additionally, UPS capacity is often insufficient to handle sudden AI power spikes, potentially causing brief outages. According to the Uptime Institute 2024 Global Data Center Survey, 23% of downtime events are power-related. Moreover, 54% of operators reported that their most recent major outage cost more than $100,000, and 20% reported costs exceeding $1 million.

Battery Backup Units (BBUs) provide a distributed backup solution, located directly at the server or rack level. Being closer to the load, BBUs respond faster than centralized UPS systems. Failures affect only a single rack, offering greater flexibility and reliability.

Table: UPS vs. BBU Backup Systems

| Item | UPS | BBU |
|---|---|---|
| Power Path | AC → DC → AC → IT load | Paralleled with HVDC or 48V DC bus; charges during normal operation, discharges during outage |
| Deployment | Located in electrical room; protects entire row or hall | Installed inside or beside server rack; protects a single rack |
| Battery Type | Lead-acid | Lithium-ion |
| Lifespan | 3–5 years | 5–10 years |
| Failure Impact | Single point failure may cause wide outage | Localized impact; other racks continue running |
| Response Speed | Limited by conversion latency | Close to servers; near-instant response |

Supercapacitors are another critical component. They buffer sudden power spikes during AI training or inference, stabilizing power delivery and reducing stress on BBUs. Supercapacitors handle instantaneous demands, while BBUs provide sustained backup; together, they improve power system stability and extend equipment lifetime.
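
The split of duties between supercapacitors (short spikes) and BBUs (sustained hold-up) shows up clearly in first-order sizing. A capacitor discharging from V_max to V_min releases ½·C·(V_max² − V_min²), while a battery must supply load × time. Every number below (100 kW transient for 1 s, a bus sagging from 54 V to 44 V, 90 s of hold-up, 95% converter efficiency) is a hypothetical assumption, and converter losses on the capacitor side are ignored.

```python
def supercap_farads(energy_j: float, v_max: float, v_min: float) -> float:
    """Capacitance needed to deliver energy_j while discharging from v_max to v_min.

    Usable capacitor energy: E = 0.5 * C * (v_max**2 - v_min**2).
    """
    return 2.0 * energy_j / (v_max**2 - v_min**2)

def bbu_kwh(load_kw: float, holdup_s: float, efficiency: float = 0.95) -> float:
    """Battery energy (kWh) to carry load_kw for holdup_s; efficiency is assumed."""
    return load_kw * holdup_s / 3600.0 / efficiency

# Hypothetical rack: a 100 kW spike lasting 1 s on a bus allowed to sag from
# 54 V to 44 V, plus 90 s of BBU hold-up for a 100 kW steady load.
c = supercap_farads(energy_j=100e3 * 1.0, v_max=54.0, v_min=44.0)
e = bbu_kwh(load_kw=100.0, holdup_s=90.0)
print(f"Supercapacitor: {c:.0f} F")    # about 204 F
print(f"BBU energy:     {e:.2f} kWh")  # about 2.63 kWh
```

The capacitor stores little energy but can release it almost instantly, whereas the battery stores far more energy at a lower discharge rate, which matches the division of roles described above.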

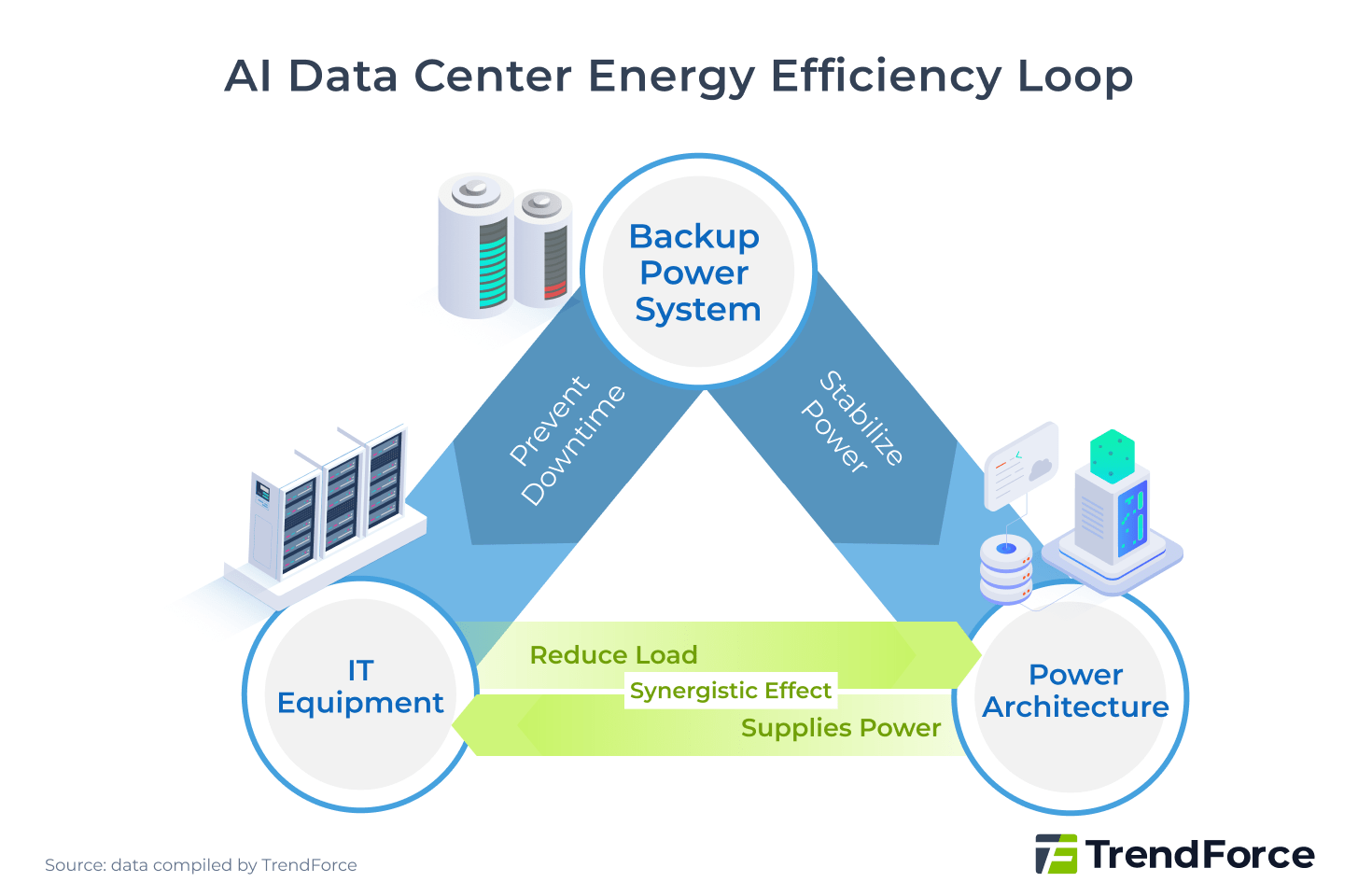

Improving Data Center Energy Efficiency: Hardware, Power Architecture, and Intelligent Backup

Optimizing data center energy usage requires a multi-layered approach: upgrading hardware to reduce power consumption, innovating power architecture to improve transmission efficiency, and deploying distributed backup systems to ensure operational stability. These solutions complement each other, addressing demand reduction, efficiency gains, and reliability enhancement, thereby comprehensively improving energy efficiency and PUE.

However, adopting new technologies also presents challenges. Integrating HVDC and distributed backup systems with existing AC infrastructure involves high initial costs, complex system integration, increased training requirements for technical staff, and managing the risks of running old and new systems in parallel. Enterprises should implement a phased adoption strategy, balancing ROI and operational risks, while fostering cross-department collaboration to ensure smooth upgrades.


AI Leaders and Power Vendors Drive Data Center Power Innovation

Power architecture innovation relies not only on technical breakthroughs but also on industry consortia shaping future standards. As AI and HPC workloads surge, traditional rack architectures struggle to keep up. The Open Compute Project (OCP), launched in 2011 by Meta (then Facebook), Intel, and others, plays a key role by redefining data center infrastructure through open-source hardware.

After years of iterative development within the OCP community, the ORv3-HPR series proposed in 2024 serves as a strategic transitional solution for AI power delivery. It lays the modular groundwork for future Mt. Diablo HVDC deployments and reflects the industry-wide pursuit of efficient, high-density power delivery.

AI Leaders Split on HVDC Power Approaches

In the ongoing power system overhaul, AI leaders NVIDIA and the OCP community (whose members include major cloud providers such as Meta, Microsoft, and Google) have adopted two primary HVDC approaches.

- OCP ±400V Bipolar Approach

The OCP Mt. Diablo project advances a ±400V HVDC hybrid architecture, retaining a 480 VAC backbone while converting to ±400 VDC only at standalone power cabinets for cross-rack server delivery. This allows IT rack power to scale from 100 kW up to 1 MW. While less efficient than an 800V full-DC system, it leverages a relatively mature and flexible technology, serving as a strategic transitional step toward a full DC architecture.

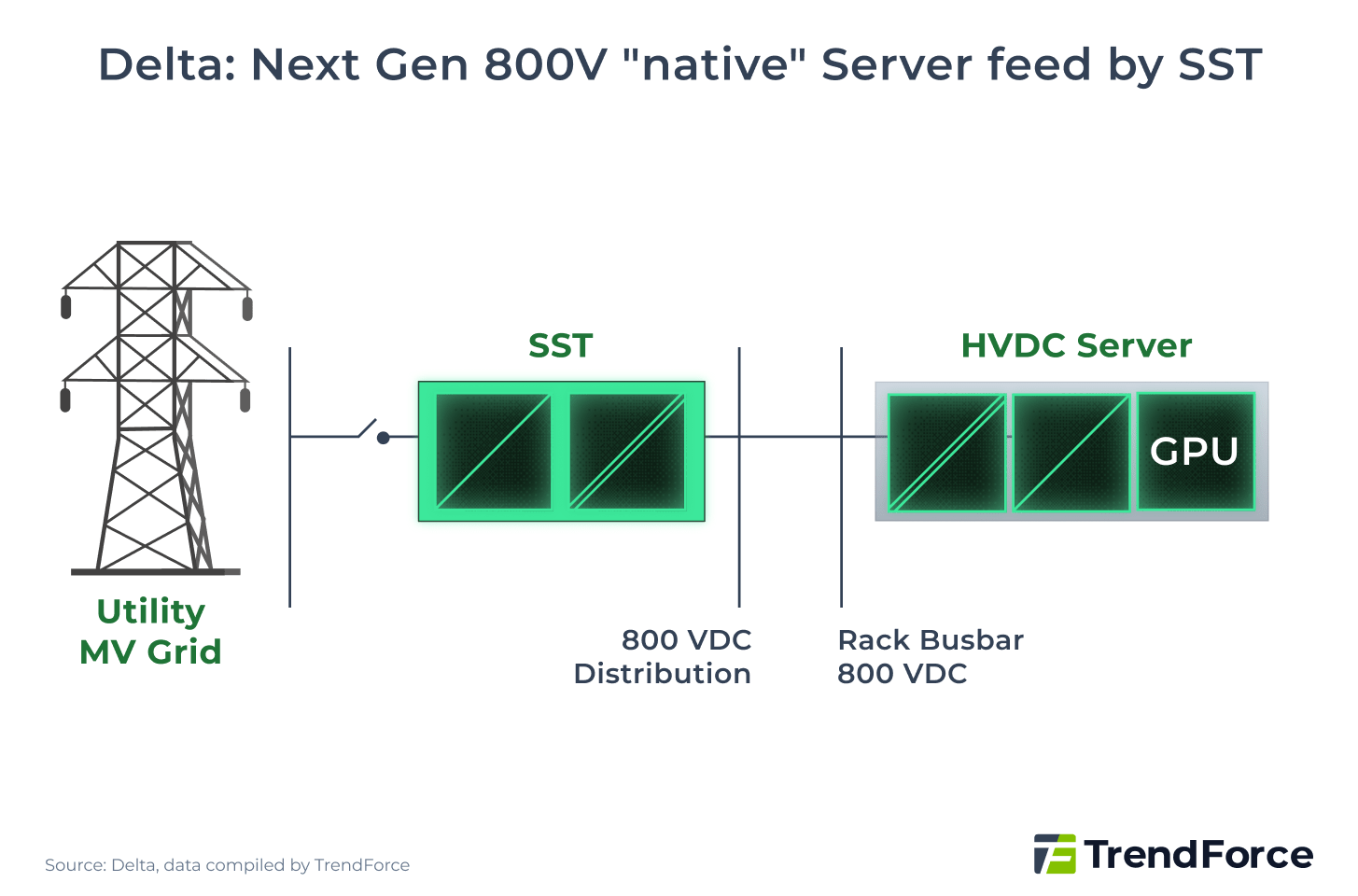

- NVIDIA 800V Monopolar DC Architecture

NVIDIA’s 800V monopolar DC architecture is designed to meet MW-scale AI rack demands. AC power is converted to 800 VDC at the substation via SST and directly powers native 800V servers, increasing transmission capacity by 85% over the same cabling. By 2028, widespread SST adoption is expected to enable a complete end-to-end HVDC architecture, maximizing efficiency and simplifying management.
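
Higher distribution voltage matters because, for a fixed power, current falls in proportion to voltage (I = P / V), and resistive loss in a given conductor scales with the square of the current. The sketch below compares the 48 V bus mentioned in the UPS/BBU table with 400 V and 800 V at 1 MW. It deliberately ignores AC power factor, three-phase distribution, and conductor sizing, so it is not meant to reproduce NVIDIA’s 85% capacity figure.

```python
# Current and resistive (I²R) loss for the same power at different DC voltages.
POWER_W = 1_000_000  # 1 MW, the upper rack power cited for Mt. Diablo

def current_a(power_w: float, volts: float) -> float:
    """DC current at a given bus voltage: I = P / V."""
    return power_w / volts

def relative_loss(v_low: float, v_high: float) -> float:
    """Conductor loss at v_low relative to v_high, for the same power and the
    same conductor resistance: (I_low / I_high)**2 = (v_high / v_low)**2."""
    return (v_high / v_low) ** 2

for volts in (48, 400, 800):
    print(f"{volts:>4} V: {current_a(POWER_W, volts):>9,.0f} A")
print(f"48 V vs 800 V conductor loss: {relative_loss(48, 800):.0f}x")
```

For loads connected pole-to-pole, a ±400 V bipolar bus presents 800 V, so its conductor currents follow the 800 V row rather than the 400 V row.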

HVDC Era Reshapes Data Center Power Supply Chain

The surge in AI compute demand is driving data centers toward HVDC (high-voltage direct current) power architectures, creating a new landscape in the related supply chain.

- Data Center Power Infrastructure: Vendors such as Schneider Electric, Eaton, and Vertiv dominate this segment, providing critical technologies for high-voltage switches, busways, and solid-state transformers (SSTs) that ensure safe HVDC deployment and reliable power quality.

- Power System Components: Vendors such as Delta Electronics, LITEON Technology, and Flex lead this segment, covering DC distribution and energy conversion from the server hall to IT racks. Key components include power racks, BBUs, supercapacitors, and busbars, enabling high-efficiency power delivery and stable operation.

- Power Semiconductor Solutions: Vendors such as Infineon, Renesas, ROHM, Onsemi, and TI leverage third-generation semiconductors like SiC and GaN to produce high-performance, high-density kilovolt-class switching devices, defining the performance limits of HVDC architectures.

Looking ahead, the HVDC trend will drive greater vertical integration across the ecosystem, blurring traditional supply chain boundaries. Taiwanese companies have formed a comprehensive local cluster, covering power modules, backup systems, thermal management, and rack integration. This enables rapid delivery of MW-class HVDC solutions, positioning Taiwan as a key hub in the global HVDC ecosystem and providing data center operators worldwide with timely competitive advantages.