About TrendForce News

TrendForce News operates independently from our research team, curating key semiconductor and tech updates to support timely, informed decisions.


[News] Samsung Unveils CXL Roadmap: CMM-D 2.0 Samples Ready, 3.1 Targeted for Year-End

At the 2025 OCP Global Summit, Samsung highlighted its HBM4e progress, citing per-pin speeds above 13 Gbps and a peak throughput of 3.25 TB/s, and also unveiled its CXL Memory Module (CMM) roadmap, according to TechNews.
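
The two HBM4e figures can be cross-checked with the usual peak-bandwidth arithmetic: interface width times per-pin rate, divided by 8 bits per byte. The sketch below is a minimal illustration that assumes a 2048-bit data interface per stack, the width defined for HBM4 by JEDEC. The article itself does not state the interface width. Under that assumption, exactly 13 Gbps per pin gives 3,328 GB/s, which equals the quoted 3.25 TB/s only if 1 TB is counted as 1,024 GB.

```python
# Assumption (not stated in the article): an HBM4e stack uses a 2048-bit data interface.
INTERFACE_WIDTH_BITS = 2048
PER_PIN_GBPS = 13.0  # per-pin data rate quoted by Samsung ("above 13 Gbps")


def stack_bandwidth_gb_s(width_bits: int, per_pin_gbps: float) -> float:
    """Peak per-stack bandwidth in GB/s: pins x per-pin rate (Gb/s) / 8 bits per byte."""
    return width_bits * per_pin_gbps / 8


gb_s = stack_bandwidth_gb_s(INTERFACE_WIDTH_BITS, PER_PIN_GBPS)
print(f"{gb_s:.0f} GB/s")          # 3328 GB/s
print(f"{gb_s / 1024:.2f} TB/s")   # 3.25 TB/s, counting 1 TB = 1024 GB
print(f"{gb_s / 1000:.3f} TB/s")   # 3.328 TB/s, counting 1 TB = 1000 GB
```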

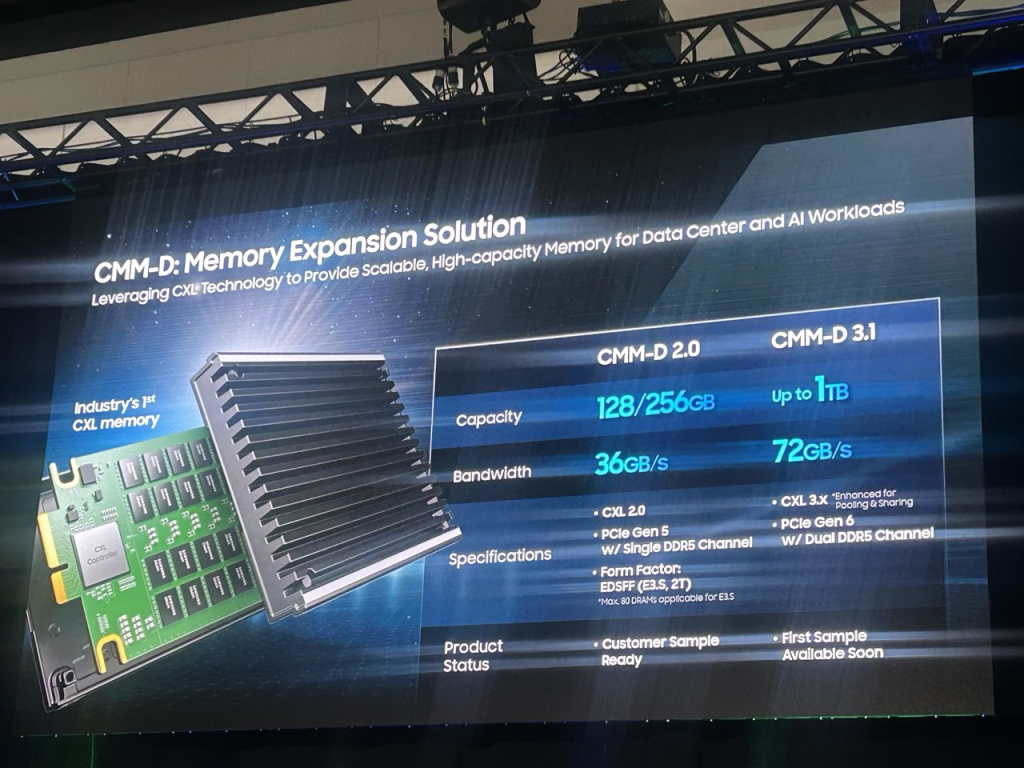

Notably, TechNews reports that Samsung is aggressively expanding its CMM roadmap, with the CMM-D series set for a flagship launch in 2025. CMM-D 2.0 offers 128 GB and 256 GB capacities, up to 36 GB/s of bandwidth, CXL 2.0 compliance, PCIe Gen 5 support, and a single DDR5 channel. Customer samples are reportedly already available.

As Samsung explains, CMM-D uses CXL 2.0 to expand memory by linking multiple processors and devices. According to Samsung, CMM-D integrates seamlessly with existing local dual in-line memory modules (DIMMs), increasing memory capacity by up to 50% and bandwidth by up to 100%. By easing infrastructure expansion limits, CMM-D helps lower total cost of ownership (TCO) and offers an efficient option for business needs, the company states.

TechNews adds that while Samsung’s current CMM-D 1.1 includes the industry’s first CXL solution using open-source firmware, CMM-D 2.0 focuses on building learning platforms and providing comprehensive solutions.

Building on this roadmap, TechNews reports that Samsung is developing CMM-D 3.1 as a longer-term product, with design-in expected by year-end. The module is set to scale up to 1 TB, deliver 72 GB/s of bandwidth, and support CXL 3.0, which is optimized for memory pooling and sharing. The interface will also move to PCIe Gen 6 with dual DDR5 channels, and initial samples are reportedly coming soon, the report notes.
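
Putting the reported CMM-D 2.0 and CMM-D 3.1 figures side by side shows how the generations compare. The sketch below is a minimal illustration that uses only the numbers TechNews cites. It makes two simplifying assumptions: 1 TB is counted as 1,024 GB, and module bandwidth is split evenly across DDR5 channels. With those assumptions, maximum capacity grows fourfold and bandwidth doubles. The per-channel figure stays at 36 GB/s, which suggests that the bandwidth gain tracks the move from one DDR5 channel to two. The article does not state this explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CmmSpec:
    """Headline CMM-D figures as reported by TechNews."""
    name: str
    max_capacity_gb: int
    bandwidth_gb_s: float
    ddr5_channels: int
    pcie_gen: int

    def bandwidth_per_channel(self) -> float:
        # Simplifying assumption: module bandwidth divides evenly across DDR5 channels.
        return self.bandwidth_gb_s / self.ddr5_channels


cmm_d_2_0 = CmmSpec("CMM-D 2.0", 256, 36.0, 1, 5)
cmm_d_3_1 = CmmSpec("CMM-D 3.1", 1024, 72.0, 2, 6)  # 1 TB counted as 1024 GB (assumption)

capacity_ratio = cmm_d_3_1.max_capacity_gb / cmm_d_2_0.max_capacity_gb
bandwidth_ratio = cmm_d_3_1.bandwidth_gb_s / cmm_d_2_0.bandwidth_gb_s
print(f"capacity: {capacity_ratio:.1f}x")    # capacity: 4.0x
print(f"bandwidth: {bandwidth_ratio:.1f}x")  # bandwidth: 2.0x
for spec in (cmm_d_2_0, cmm_d_3_1):
    print(f"{spec.name}: {spec.bandwidth_per_channel():.1f} GB/s per DDR5 channel")
```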

Memory Giants Gear Up for CXL Race

As the Chosun Daily highlights, with the HBM market heating up, leading chipmakers are extending their AI semiconductor strategies to incorporate Compute Express Link (CXL), a next-generation memory interface.

The report further explains that AI servers typically combine CPUs, GPUs, and DRAM, and CXL streamlines data transfer among these components. By delivering higher performance with fewer chips, CXL helps cut infrastructure costs, the report adds.

As the current HBM leader, SK hynix completed customer validation for a 96 GB DDR5 DRAM module based on the CXL 2.0 standard in late April. The company says the module delivers 50% more capacity and 30% higher bandwidth than standard DDR5 in servers, and it is also advancing validation of a 128 GB version.
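
Percentage claims like these are easier to read against a concrete system. The sketch below applies each vendor's claimed uplift to a baseline server. The baseline is hypothetical and does not come from the article: 12 DDR5-4800 channels with one 64 GB DIMM each. Each 64-bit DDR5-4800 channel moves 4,800 MT/s × 8 bytes = 38.4 GB/s. The vendors may measure against different baselines, so the results illustrate the arithmetic only. They are not a like-for-like product comparison.

```python
# Hypothetical baseline server (not from the article): 12 DDR5-4800 channels, one 64 GB DIMM each.
CHANNELS = 12
DIMM_GB = 64
DDR5_4800_GB_S_PER_CHANNEL = 4800e6 * 8 / 1e9  # 4800 MT/s x 8-byte channel = 38.4 GB/s


def apply_uplift(base: float, uplift_pct: float) -> float:
    """Scale a baseline by a percentage uplift, e.g. 50 -> 1.5x."""
    return base * (1 + uplift_pct / 100)


base_capacity_gb = CHANNELS * DIMM_GB
base_bandwidth_gb_s = CHANNELS * DDR5_4800_GB_S_PER_CHANNEL

# (capacity uplift %, bandwidth uplift %) as claimed by each vendor
claims = {
    "SK hynix 96 GB CXL 2.0 module": (50, 30),
    "Samsung CMM-D (upper bound)": (50, 100),
}
for name, (cap_pct, bw_pct) in claims.items():
    capacity = apply_uplift(base_capacity_gb, cap_pct)
    bandwidth = apply_uplift(base_bandwidth_gb_s, bw_pct)
    print(f"{name}: {capacity:.0f} GB, {bandwidth:.1f} GB/s")
```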

Notably, according to The Bell, SK hynix is incorporating chiplet technology into its first in-house CXL controller, which will reportedly be manufactured by TSMC.

According to the report, SK hynix used a CXL controller from China's Montage Technology in its CMM-DDR5 in 2024. The company is now preparing to produce its own controllers for CXL 3.0 and 3.1, the report adds.

Meanwhile, the Chosun Daily notes that Micron Technology, the world's third-largest memory chipmaker, began deploying CXL 2.0 memory expansion modules last year in a bid to catch up with Samsung and SK hynix.


(Photo credit: Samsung)