What Is AI Infrastructure?

AI Infrastructure, also known as the AI Stack, comprises software and hardware resources that support the development, training, and deployment of Artificial Intelligence (AI) and Machine Learning (ML) applications.

Built to handle the large-scale computing and data processing required for AI projects, it features high performance, flexibility, and scalability. This helps enterprises accelerate the implementation of AI solutions, enhancing operational efficiency and market competitiveness.

AI Infrastructure vs. IT Infrastructure

Both AI infrastructure and traditional IT infrastructure share computing, storage, and networking resources, but their design philosophies and application goals are fundamentally different.

Traditional IT infrastructure supports daily business operations, handling general computing needs such as ERP, databases, and office applications. In contrast, AI infrastructure is a new architecture that accommodates emerging workloads and specialized technology stacks such as deep learning and generative AI. It requires higher-performance hardware and has a completely different architectural design and supporting software ecosystem.

AI infrastructure is not merely an upgrade of existing IT systems; it requires a comprehensive overhaul of technical architecture, organizational operations, and resource allocation.

“Every industrial revolution begins with infrastructure. AI is the essential infrastructure of our time, just as electricity and the internet once were.”

— Jensen Huang, Founder and CEO of NVIDIA, NVIDIA Newsroom, June 11, 2025

Cloud Giants' Investment in AI Infrastructure

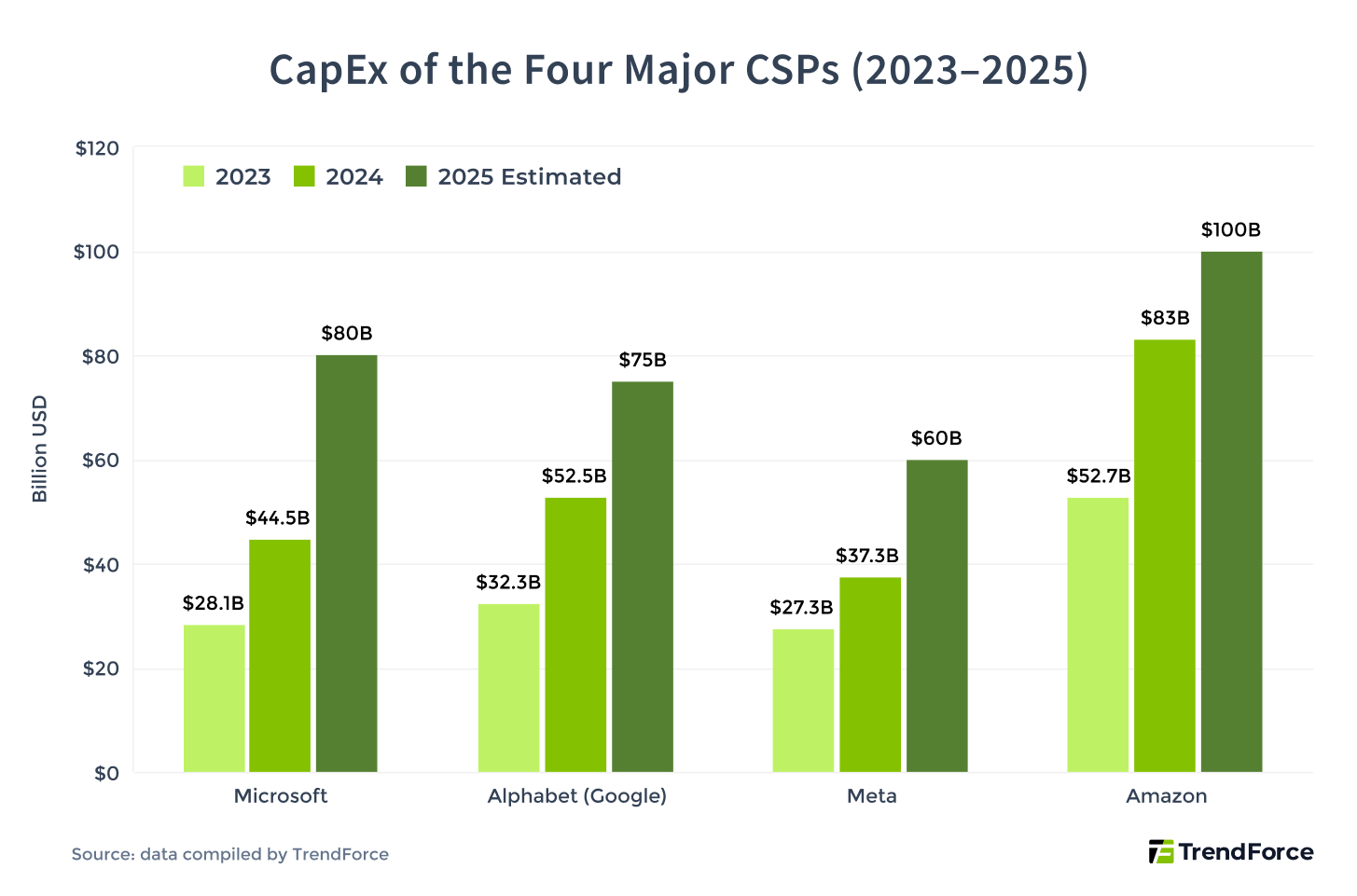

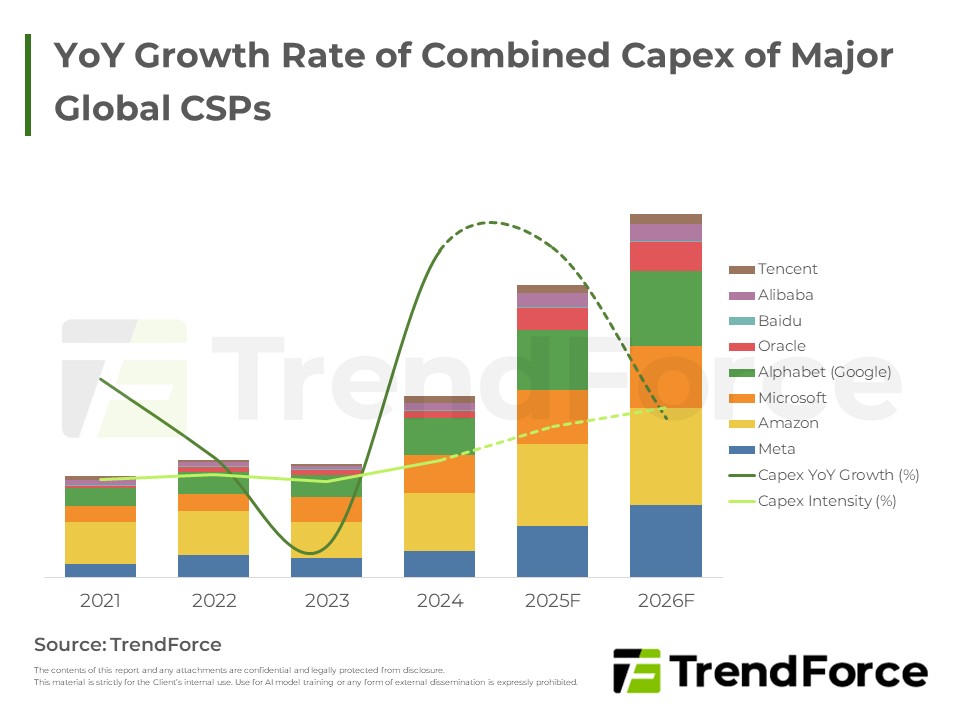

The global AI wave is accelerating the development of AI infrastructure. Microsoft, Alphabet (Google), Meta, and Amazon, the four major North American cloud service providers (CSPs), have invested heavily in cloud computing in recent years.

In the fourth quarter of 2024, these CSPs’ cloud computing businesses still grew, but growth generally slowed and fell short of market expectations. At the beginning of 2025, they faced challenges from low-cost AI models led by DeepSeek. Nevertheless, the four CSPs embraced the competition and have increased their CapEx in this area in 2025.

Note: The following CapEx data is as of June 2025.

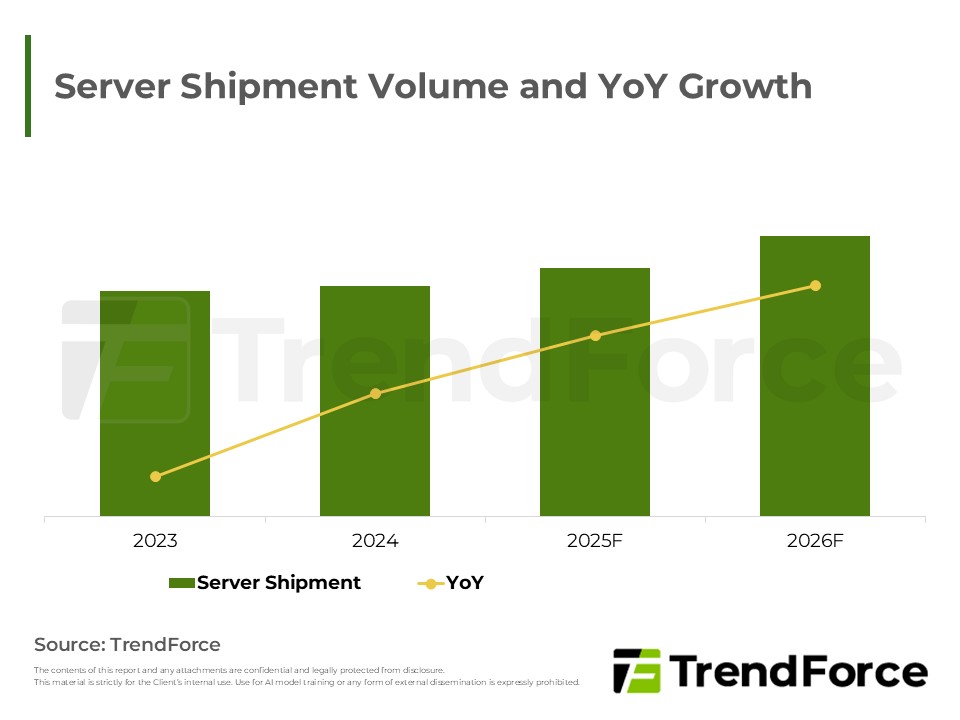

Major CSPs Increase CapEx to Dominate AI Stacking

The generative AI boom continues to drive business momentum. Despite short-term revenue pressures and the rise of low-cost AI, major CSPs are increasing CapEx to strengthen cloud and partnership ecosystems, demonstrating strong confidence in the long-term potential of AI infrastructure.

- Microsoft plans to reach $80 billion in CapEx in fiscal year 2025, focusing on expanding AI data centers, chips, and models, and on deepening its collaboration with OpenAI. However, the company also indicated that it might slow down or adjust plans in certain areas.

- Alphabet is raising its CapEx from $52.5 billion in 2024 to $75 billion this year, accelerating investments in data centers and self-developed AI chips such as its TPUs. This investment wave will drive development of its cloud platform (Google Cloud), AI development platform (Vertex AI), AI models (Gemini), and autonomous vehicle business (Waymo).

- Meta expects CapEx of $60 to $65 billion this year, focusing on building large-scale AI data campuses and enhancing model training platforms. It is also actively developing its partnership ecosystem to establish future advantages through AI infrastructure. In April, it launched the Llama 4 series of models, which offer high deployment flexibility and make it easier for enterprises to deploy their own or hybrid AI applications.

- Amazon plans to increase its CapEx from $75 billion in 2024 to $100 billion this year, continuing to build AI data centers and AWS infrastructure and services. It is also strengthening development of Trainium chips and Nova models, aggressively capturing the AI computing resources market.
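
The year-over-year increases implied by these figures can be checked with a few lines of arithmetic. The sketch below covers only the two CSPs for which the list gives both a 2024 and a 2025 figure; the function name `yoy_growth_pct` and the dictionary layout are illustrative choices, not something from the source.

```python
def yoy_growth_pct(previous: float, current: float) -> float:
    """Return year-over-year growth as a percentage."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return (current - previous) / previous * 100.0


# CapEx in billions of USD, taken from the list above (2024 figure, 2025 plan).
capex = {
    "Alphabet": (52.5, 75.0),
    "Amazon": (75.0, 100.0),
}

# Planned growth per company, rounded to one decimal place.
growth = {
    name: round(yoy_growth_pct(prev, cur), 1)
    for name, (prev, cur) in capex.items()
}

for name, pct in growth.items():
    print(f"{name}: {pct}% planned CapEx growth")
```

On these numbers, Alphabet's planned increase works out to roughly 43% and Amazon's to roughly 33%.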

AI infrastructure has become the core of resource competition among tech giants. From hardware development to model services, major CSPs are vying for the next round of market dominance, continuously reshaping the global cloud and AI ecosystem.

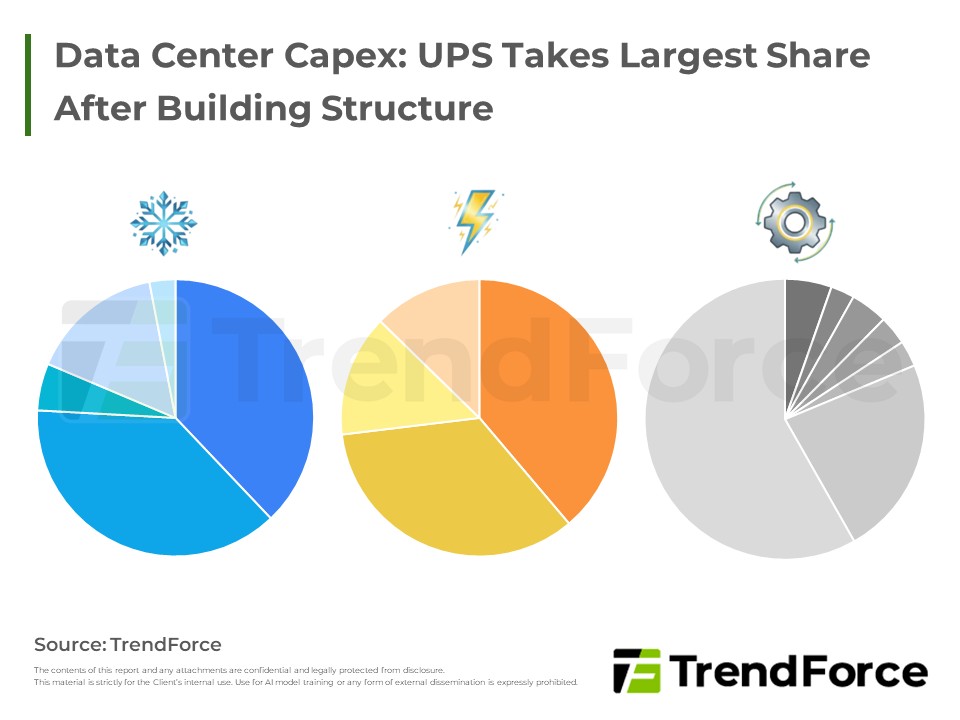

3 Key Strategies for Successfully Driving AI Infrastructure

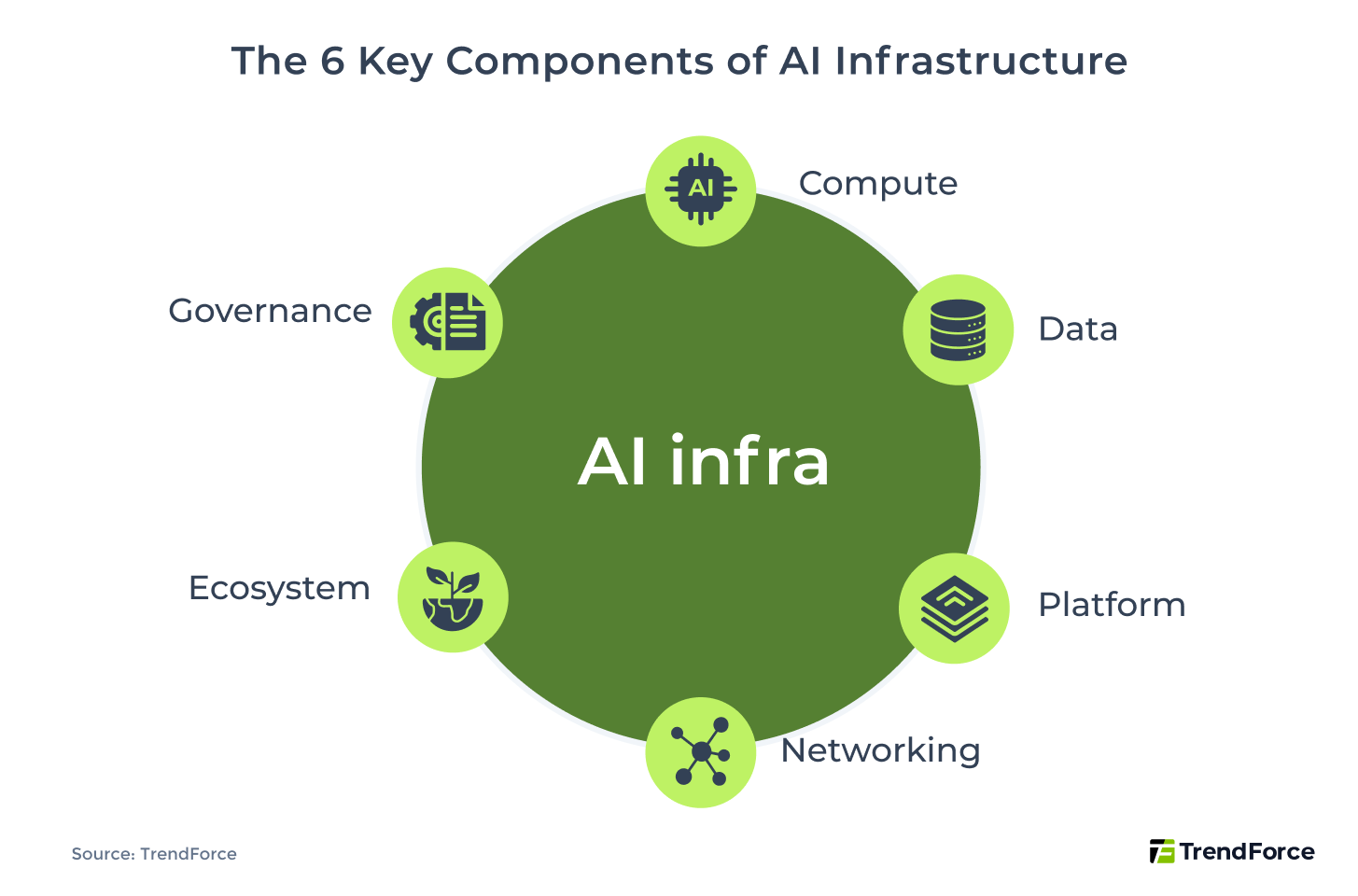

Before discussing AI infrastructure strategies, it's essential to understand its 6 core components: Compute, Data, Platform, Networking, Ecosystem, and Governance. These elements form a complete architectural stack and work together, serving as the foundation for driving AI solutions in enterprises:

- Compute: The Brain of AI

Sufficient compute power determines the speed, scale, and responsiveness of AI model training and deployment. It primarily consists of servers equipped with AI accelerators such as GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), acting as the core engine for machine learning operations.

- Data: The Lifeblood of AI

Data shapes how well AI models perform and the business value they can generate. AI infrastructure must support massive data handling across both training and inference stages, enabling efficient data collection, high-speed storage (e.g., data lakes), cleansing, and secure management to help models learn from high-quality datasets.

- Platform: The Skeleton and Organs of AI

Serving as the bridge between compute and data, platforms provide the integrated environment needed to develop and deploy AI solutions. A robust and user-friendly platform can dramatically lower technical barriers, accelerate time-to-value from experimentation to production, and enhance resource allocation efficiency.

- Networking: The Nervous System of AI

Networking connects data, compute, and platforms, ensuring that information flows rapidly and systems respond in real time. Without a stable, high-speed network, AI models cannot function properly, which leads to performance bottlenecks, increased latency, and a degraded user experience.

- Ecosystem: The Social Network of AI

An AI ecosystem includes both internal and external technology partners and tool providers that help enterprises accelerate adoption and reduce risks. By collaborating with the right partners, organizations can avoid building everything in-house and instead focus on delivering differentiated value.

- Governance: The Steward of AI

Governance ensures that AI systems operate securely, ethically, and in compliance with regulations. It encompasses policy management for data and models, security protocols, and risk controls related to AI ethics, helping organizations mitigate legal and reputational risks while building long-term trust and sustainability.

For enterprises, building a strong AI infrastructure is not just a sign of technological leadership. It also plays a critical role in unlocking business value and enhancing competitiveness. Let’s now explore the 3 key strategies that enable successful AI infrastructure implementation.

1. Goal-Driven (Why): Business-Oriented AI Investment Alignment

A successful AI infrastructure investment must be guided by clear, measurable business objectives and return on investment (ROI). The focus should be on solving critical business challenges and uncovering new growth opportunities. This can include improving customer experience to drive revenue, or accelerating product development to reduce operational costs. Every infrastructure investment should be closely tied to the business value it is expected to generate.

Take TrendForce as an example. In the rapidly evolving tech market, staying ahead requires faster research turnaround and deeper analysis. To meet this need, TrendForce built its own AI infrastructure and generative models. According to data from its “Data Analytics Division”, analysts using these models improved research efficiency by an average of 60%. They also integrated a broader range of data sources, enabling more comprehensive industry insights and ensuring clients receive timely and critical market intelligence.

2. Resource Allocation (How, Where): Building Flexible and Controllable AI Infrastructure

To ensure AI infrastructure investments are effective, organizations must take a goal-driven approach when allocating technical, human, and financial resources. Collaborating within the ecosystem can reduce the burden of development, enabling faster deployment of AI applications while maintaining long-term innovation capabilities.

Technology Selection and Deployment Strategies

AI technology resources should be aligned with the organization’s application goals and operational realities. Key factors include data sensitivity, model complexity, and future scalability. Successful enterprises often take a phased approach—starting with a pilot project, then expanding investment based on proven results to minimize risks and maximize resource efficiency.

The table below outlines 3 common deployment models, helping organizations determine “where” and “how” to build AI infrastructure based on cost, security, and flexibility requirements:

| Deployment Model | Description | Investment & Control | Best Use Cases |
|---|---|---|---|
| Cloud-First | Relies on cloud services and pre-built models; fast and flexible | Low | Limited resources; need for rapid prototyping |
| Hybrid Model | Combines cloud and on-premises resources; balances flexibility and control | Medium | Data sensitivity; balancing cost and performance |
| On-Premise Optimized | Fully self-managed infrastructure; maximizes control and performance | High | High security demands; deep AI-business integration |
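
As a rough illustration of how the table might be turned into a first-pass screening rule, the sketch below maps a few requirement inputs to one of the three deployment models. The `Requirements` fields, the low/medium/high scale, and the decision rules are all assumptions made for this example; a real decision would weigh many more factors than the table lists.

```python
from dataclasses import dataclass

LEVELS = ("low", "medium", "high")  # hypothetical rating scale


@dataclass
class Requirements:
    data_sensitivity: str          # how sensitive the data is
    budget: str                    # available investment and appetite for control
    needs_rapid_prototyping: bool  # whether speed to a first prototype dominates


def suggest_deployment_model(req: Requirements) -> str:
    """Return a deployment model name from the table, using toy rules."""
    for value in (req.data_sensitivity, req.budget):
        if value not in LEVELS:
            raise ValueError(f"expected one of {LEVELS}, got {value!r}")

    # High security demands plus high investment capacity: self-managed.
    if req.data_sensitivity == "high" and req.budget == "high":
        return "On-Premise Optimized"

    # Limited resources or rapid prototyping, with no sensitive data.
    if req.data_sensitivity == "low" and (
        req.budget == "low" or req.needs_rapid_prototyping
    ):
        return "Cloud-First"

    # Everything in between balances cloud flexibility and on-premises control.
    return "Hybrid Model"


print(suggest_deployment_model(Requirements("medium", "medium", False)))
```

Encoding the rules this way keeps the trade-offs explicit and easy to revisit after a pilot project, which fits the phased approach described above.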

Once a deployment model is selected, organizations should strengthen their core technology foundation—compute, data, platform, and networking. A balanced infrastructure ensures your AI delivers results at scale and with consistency.

At the same time, building a collaborative ecosystem is key to accelerating deployment. Partnering with cloud platforms, AI chip providers, open-source communities, and domain experts can significantly reduce time-to-implementation and mitigate development risks.

Organizational Collaboration and Talent Strategy

AI projects often span multiple departments and functions. To ensure alignment, organizations should establish a flat communication structure that fosters close collaboration between technical teams (such as AI engineers and infrastructure specialists) and business units. This helps clarify requirements, define problems effectively, and continuously refine solutions.

At the same time, project roles and responsibilities should be restructured around a “business-led, tech-supported” model. Each stakeholder must have clear goals and deliverables to prevent disconnects between business needs and technical execution.

In addition, companies should invest in employee training and development to help teams stay current with evolving AI technologies. Building a skilled in-house AI team improves both implementation success and the organization’s ability to adapt and scale AI initiatives over time.

Full Lifecycle Financial Planning

When planning AI infrastructure, organizations should consider more than just initial hardware purchases or cloud subscription fees. A lifecycle-based approach is essential for systematically evaluating the overall cost structure. This includes:

- Capital expenditures such as data center construction and investments in AI accelerators.

- Operating expenses including cloud service fees, electricity, network usage, software licenses, and system maintenance.

- Hidden costs such as talent recruitment and training, long-term data management, system integration complexity, and potential risk mitigation.

Evaluating these cost elements helps organizations plan budgets more accurately and make informed investment decisions.
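
The three cost categories above can be combined into a simple total-cost-of-ownership estimate. The sketch below is a minimal model under stated assumptions: recurring costs are flat per year, no discounting or hardware refresh is applied, and the example amounts are made-up placeholders rather than figures from any real deployment. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LifecycleCosts:
    capex: float          # one-time: data center construction, AI accelerators
    annual_opex: float    # recurring: cloud fees, electricity, network, licenses, maintenance
    annual_hidden: float  # recurring: recruitment, training, data management, integration
    years: int            # planning horizon

    def total(self) -> float:
        """Undiscounted lifecycle cost over the planning horizon."""
        return self.capex + self.years * (self.annual_opex + self.annual_hidden)

    def hidden_share(self) -> float:
        """Fraction of the lifecycle cost that comes from hidden costs."""
        return self.years * self.annual_hidden / self.total()


# Placeholder amounts in millions of USD, for illustration only.
plan = LifecycleCosts(capex=10.0, annual_opex=3.0, annual_hidden=1.0, years=5)

print(f"Lifecycle cost: {plan.total():.1f}M")
print(f"Hidden-cost share: {plan.hidden_share():.1%}")
```

Even in this simplified form, the recurring items outweigh the initial purchase over a multi-year horizon, which is why the upfront hardware or subscription price alone is a poor basis for budgeting.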

3. Risk Management (What If): Security, Compliance, and Sustainable Governance

Forward-looking risk governance is essential for ensuring the long-term stability of AI infrastructure. Organizations should strengthen risk management across 3 key areas:

- Security: Protect the confidentiality and integrity of AI data and models throughout their lifecycle, preventing unauthorized access and malicious attacks.

- Compliance: Align with legal and ethical standards by establishing transparent, fair, and traceable AI governance frameworks that minimize regulatory risks.

- Sustainability: Incorporate carbon emissions, energy efficiency, and long-term maintenance costs into planning to ensure alignment with ESG goals and responsible technology development.

The Rise of Low-Cost Models Is Reshaping AI Infrastructure

In early 2025, China’s DeepSeek and similar models sent shockwaves through the AI industry, accelerating infrastructure expansion. Reports have circulated that training these models can cost as little as $30 to $50. However, most assessments suggest these figures refer to partial or final training costs under conditions of mature technology and lower hardware prices. They do not account for the full cost of infrastructure development, data preparation, or initial system setup.

Low-cost AI models are designed to lower entry barriers for newcomers by minimizing resource consumption, development time, and operating costs. These models often achieve cost efficiency through architectural optimization and more effective data utilization. DeepSeek, for example, uses a mixture-of-experts (MoE) design that combines shared experts with routed experts. It balances the load across experts without an auxiliary loss, enabling more efficient use of computing resources without sacrificing performance.
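
To make the idea concrete, here is a toy sketch of shared-plus-routed expert routing with bias-based load balancing. It is not DeepSeek's implementation: tokens are scalars, experts are plain Python callables, affinity scores are assumed to be positive, and all names (`route_top_k`, `update_bias`, `moe_forward`, `simulate`) and parameter values are hypothetical. The key point it illustrates is that a per-expert bias influences only which experts are selected, while the gate weights still come from the unbiased scores, so no extra loss term is needed to balance load.

```python
import random


def route_top_k(scores, bias, k):
    """Select k routed experts by biased score; gate weights use unbiased scores."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    chosen = ranked[:k]
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}


def update_bias(bias, load, step_size):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    return [
        b - step_size if n > mean_load else b + step_size if n < mean_load else b
        for b, n in zip(bias, load)
    ]


def moe_forward(x, shared_experts, routed_experts, gates):
    """Shared experts always run; routed experts run only when selected."""
    out = sum(f(x) for f in shared_experts)
    out += sum(w * routed_experts[i](x) for i, w in gates.items())
    return out


def simulate(num_batches=50, tokens_per_batch=64, num_experts=4, k=2,
             step_size=0.05, seed=0):
    """Return per-batch expert loads while the bias is adjusted between batches."""
    rng = random.Random(seed)
    bias = [0.0] * num_experts
    history = []
    for _ in range(num_batches):
        load = [0] * num_experts
        for _ in range(tokens_per_batch):
            # Expert 0 gets a systematically higher affinity to create imbalance.
            scores = [rng.random() + (0.5 if i == 0 else 0.0) for i in range(num_experts)]
            for i in route_top_k(scores, bias, k):
                load[i] += 1
        history.append(load)
        bias = update_bias(bias, load, step_size)
    return history


history = simulate()
print("first batch load:", history[0])
print("last batch load: ", history[-1])
```

Comparing the first and last batch loads shows how the bias adjustment affects the initially favored expert in this toy setup; the step size controls how quickly the selection shifts.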

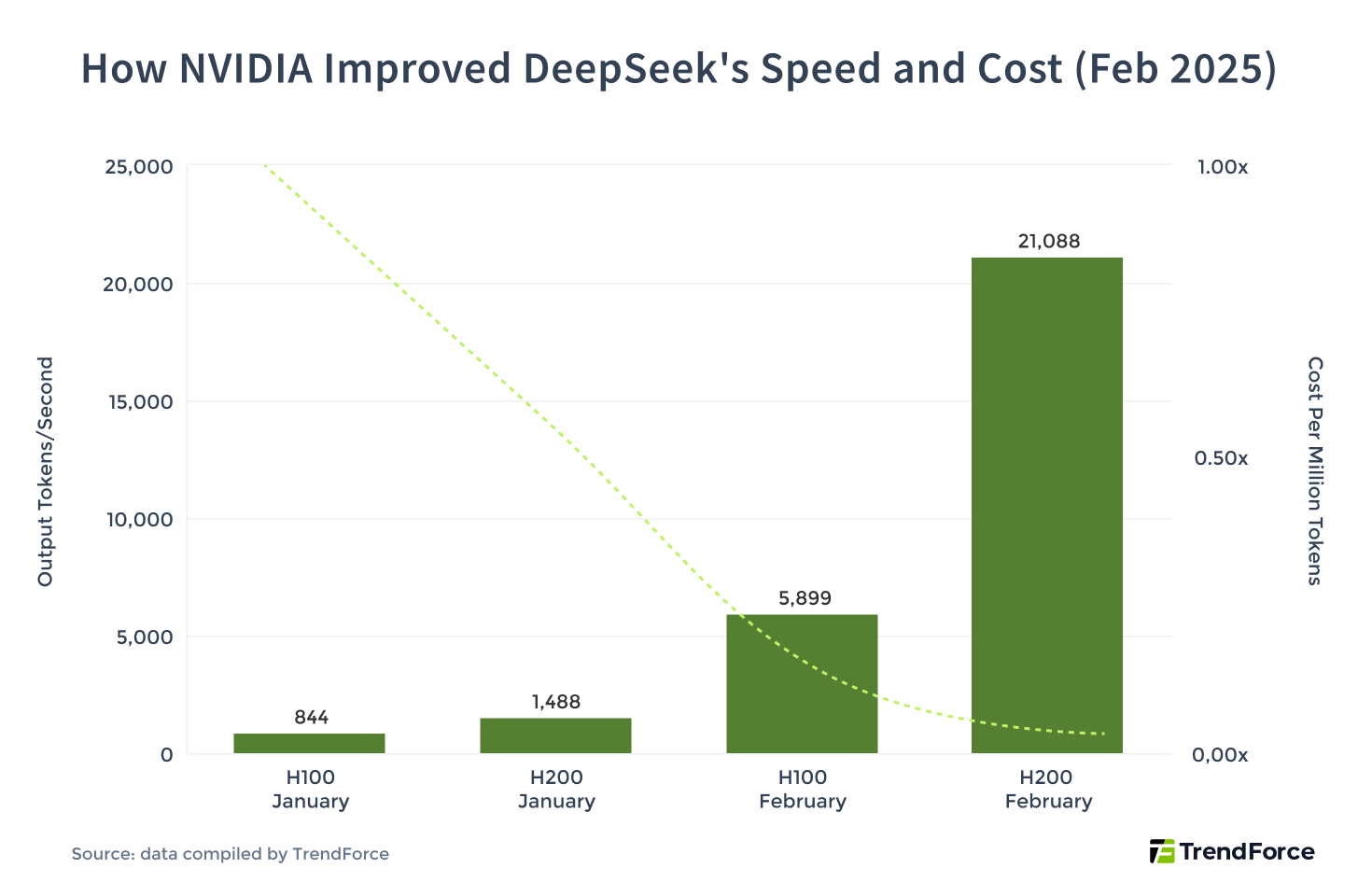

In February 2025, NVIDIA open-sourced the first version of the DeepSeek-R1 model optimized for its Blackwell architecture. Inference speed increased by 25 times compared to January, while the cost per token dropped by a factor of 20, marking a major leap in both performance and cost control.

While questions remain about the return on investment in AI infrastructure, major cloud providers have continued to ramp up capital spending in 2025. Many are also developing custom AI chips (ASICs) to strengthen their competitive edge and national positioning. In the long run, high-precision AI services remain essential across industries. Their value cannot be fully replaced by low-cost models, underscoring the need for sustained investment.

How to Plan for the Next Wave of AI Infrastructure

This year marks a major shift in the AI landscape. The emergence of low-cost AI models offers an alternative to traditionally capital-intensive approaches and signals a turning point for the industry. This trend is expected to drive broader AI adoption and accelerate real-world applications across sectors.

As a result, organizations are placing greater focus on optimizing existing AI infrastructure through software and algorithmic improvements to enable more cost-efficient AI product development. This also helps reduce the cost for end users.

Going forward, the market is likely to see a dual-track approach: proprietary, high-precision, closed-source models developed by leading companies, alongside a growing ecosystem of low-cost, open-source models that offer greater flexibility and accessibility. Each organization can choose the path that best fits its strategic and operational needs.