About TrendForce News

TrendForce News operates independently from our research team, curating key semiconductor and tech updates to support timely, informed decisions.

[News] OpenAI Reportedly Discontent With NVIDIA GPUs for Inference; Groq, Cerebras Gain Attention

As AI workloads increasingly shift toward inference, OpenAI may be reconsidering how well NVIDIA's chips serve its needs. According to Reuters, sources say OpenAI believes some of NVIDIA's latest AI processors may not fully meet its requirements, and the company has been exploring alternatives since last year. OpenAI is reportedly seeking new hardware that could eventually supply around 10% of its future inference computing needs.

NVIDIA continues to dominate chips used for training large AI models, but inference is emerging as a new competitive battleground. According to Reuters, moves by OpenAI and other players to explore alternatives in the inference chip market represent a test of NVIDIA's leadership in AI hardware.

Still, as InvestingLive notes, this looks less like an immediate revenue risk for NVIDIA than a sign that the next phase of AI competition will be defined by performance per dollar and response latency, not by raw training capability alone.

Meanwhile, the proposed investment deal between the two companies has been delayed, according to Reuters. NVIDIA said in September it planned to invest up to $100 billion in OpenAI in exchange for an equity stake, giving OpenAI funds to buy advanced AI chips. The deal had been expected to close within weeks, but negotiations have dragged on for months.

How Inference Is Reshaping AI Chip Preferences

As Reuters highlights, OpenAI's search for GPU alternatives since last year has focused on companies developing chips that embed large amounts of memory, in the form of SRAM, on the same piece of silicon as the rest of the processor.

Reuters explains that inference is more memory-intensive than training, because chips spend a relatively larger share of their time fetching data from memory than performing calculations. NVIDIA and AMD GPUs, by contrast, rely on external memory, which adds latency and can slow users' interactions with chatbots.
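To make the memory-bound argument concrete, the following is a minimal roofline-style sketch in Python. It assumes batch-size-1 autoregressive decoding in which every model weight is read from memory once per generated token, and it ignores KV-cache traffic. The chip and model figures (a 70B-parameter model in 16-bit weights, 1 PFLOP/s of compute, and 3 TB/s versus 30 TB/s of memory bandwidth) are hypothetical illustrations, not vendor specifications, and the names `Chip`, `decode_intensity`, `tokens_per_second`, and `bound` are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    """Hypothetical accelerator described by two roofline parameters."""
    name: str
    peak_flops: float     # FLOP/s the compute units can sustain
    mem_bandwidth: float  # bytes/s from the memory that holds the weights


def decode_intensity(bytes_per_param: float, batch: int) -> float:
    """FLOPs per byte of weight traffic for one decode step.

    Each parameter costs ~2 FLOPs (multiply + add) per sequence in the batch,
    while its bytes are read once per step and shared across the batch.
    KV-cache traffic is ignored to keep the sketch simple.
    """
    return 2.0 * batch / bytes_per_param


def tokens_per_second(chip: Chip, n_params: float,
                      bytes_per_param: float, batch: int) -> float:
    """Roofline upper bound on generated tokens/s summed over the batch."""
    flops_per_step = 2.0 * n_params * batch
    bytes_per_step = n_params * bytes_per_param
    # A step takes as long as the slower of compute and memory traffic.
    step_time = max(flops_per_step / chip.peak_flops,
                    bytes_per_step / chip.mem_bandwidth)
    return batch / step_time


def bound(chip: Chip, bytes_per_param: float, batch: int) -> str:
    """Classify a decode step by comparing its intensity to the ridge point."""
    ridge = chip.peak_flops / chip.mem_bandwidth  # FLOPs/byte where limits meet
    if decode_intensity(bytes_per_param, batch) < ridge:
        return "memory-bound"
    return "compute-bound"


if __name__ == "__main__":
    # Illustrative numbers only, not specifications of any real product.
    external = Chip("external memory (hypothetical)", 1.0e15, 3.0e12)
    on_chip = Chip("on-chip SRAM (hypothetical)", 1.0e15, 30.0e12)
    n_params, bytes_per_param = 70e9, 2.0  # 70B parameters, 16-bit weights
    for chip in (external, on_chip):
        tps = tokens_per_second(chip, n_params, bytes_per_param, batch=1)
        print(f"{chip.name}: {tps:.1f} tokens/s, "
              f"{bound(chip, bytes_per_param, 1)}")
```

With these assumptions, both configurations stay memory-bound at batch size 1 (about 1 FLOP per byte against ridge points of roughly 333 and 33 FLOPs per byte), so the token rate scales with bandwidth rather than compute: about 21 versus 214 tokens per second. The sketch ignores that on-chip SRAM offers far less capacity than external memory, a trade-off that matters for large models.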

Within OpenAI, the issue was especially evident in Codex, the company’s code-generation product. According to sources cited in the report, internal teams attributed some of Codex’s performance limitations to NVIDIA’s GPU-based hardware.

By comparison, rival offerings such as Anthropic's Claude and Google's Gemini benefit from deployments that lean more heavily on Tensor Processing Units (TPUs), custom chips developed in-house by Google. As Reuters notes, TPUs are optimized for inference-focused workloads and can deliver performance advantages over more general-purpose AI chips such as NVIDIA GPUs.

NVIDIA’s Next Move in the Inference Chip Race

Against this backdrop, NVIDIA approached companies developing SRAM-heavy chips, including Cerebras and Groq. Cerebras declined and instead struck a commercial deal with OpenAI, announced last month, Reuters notes.

Groq had also held talks with OpenAI to provide computing capacity and had drawn investor interest at a valuation of roughly $14 billion. However, by December, NVIDIA moved to license Groq’s technology in a non-exclusive, all-cash deal. While the arrangement allows other firms to license the technology, Groq has since shifted its focus toward cloud-based software after NVIDIA hired away its chip designers, Reuters adds.

Read more

- [News] NVIDIA’s $20B Groq Deal Spotlights SRAM Shift—MediaTek NPU Already On Board

- [News] NVIDIA’s OpenAI Mega-Deal: AI Boom Proof or Customer Bailout Amid Antitrust and Conflict Concerns?

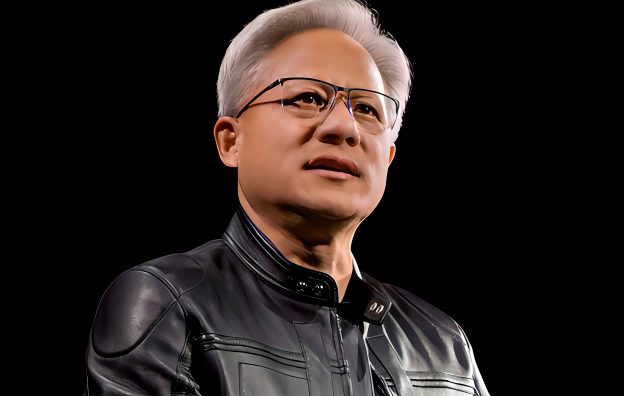

(Photo credit: NVIDIA)