About TrendForce News

TrendForce News operates independently from our research team, curating key semiconductor and tech updates to support timely, informed decisions.

[News] China Steps Up AI Push: Alibaba’s Latest Qwen Model Nears U.S. Rivals; Moonshot Advances

Almost a year after DeepSeek jolted the global AI industry, Chinese developers have accelerated the rollout of new models. According to South China Morning Post, Alibaba and Moonshot AI have both rolled out new flagship AI models, further closing the gap with U.S. leaders such as OpenAI and Google DeepMind. As the report notes, Alibaba describes Qwen3-Max-Thinking as its strongest model to date, while Moonshot positions Kimi K2.5 as the world’s most powerful open-source model.

Alibaba Advances Qwen3 Series With Trillion-Parameter Flagship

Alibaba Cloud recently unveiled its largest model to date, Qwen3-Max-Thinking, saying it offers stronger agentic capabilities. The model is now available via Alibaba’s cloud services platform and chatbot website. The company said internal tests showed “comparable” performance to leading U.S. models, including Anthropic’s Claude-Opus-4.5 and Google DeepMind’s Gemini 3 Pro, across 19 benchmarks.

As the report notes, Qwen3-Max-Thinking is the latest release in the Qwen3 series, which debuted in May with models ranging from 600 million to 235 billion parameters and has since expanded to versions exceeding one trillion parameters.

Even so, the report adds that some Qwen users expressed disappointment that the model was not open-sourced. While Qwen is among the world’s most popular open-source model families, Alibaba has so far kept its largest Max models closed and uses them to power its Qwen app.

Moonshot AI and Zhipu Roll Out New Flagship Models

Alongside Alibaba’s Qwen release, Alibaba-backed start-up Moonshot AI rolled out Kimi K2.5. Bloomberg notes that the latest version can process text, images, and video from a single prompt, in line with the shift toward omni models pioneered by OpenAI and Alphabet’s Google. Moonshot is also introducing an automated coding tool designed to compete with Anthropic’s Claude Code.

Separately, Bloomberg notes that Zhipu rolled out its image-generation model, GLM-Image, in January, saying it is China’s first model to be fully trained on domestically produced chips.

Scaling Ambitions Meet Computing Constraints

Underlying these developments, South China Morning Post points out that both Moonshot and the Qwen team are proponents of AI scaling laws—the belief that expanding compute and data drives consistent performance gains. Reflecting this approach, the report notes that the Qwen team said in a September roadmap it plans to scale its models beyond 10 trillion parameters to keep pace with U.S. peers already working at similar sizes.

However, South China Morning Post adds that Qwen team leader Lin Junyang said at an industry event that computing constraints remain a key challenge, as resources are increasingly tied up in day-to-day delivery rather than research and development.

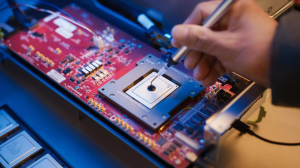

(Photo credit: Alibaba)