About TrendForce News

TrendForce News operates independently from our research team, curating key semiconductor and tech updates to support timely, informed decisions.

[News] DeepSeek Moves Closer to NVIDIA H200 Chips as China Reportedly Signals Conditional Approval

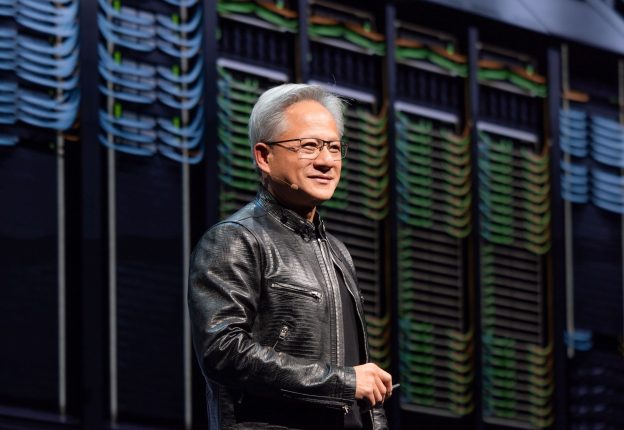

As market speculation suggests that DeepSeek plans to unveil its next-generation AI model over the Lunar New Year holiday, China has reportedly granted the leading startup conditional approval to acquire NVIDIA's H200 AI chips, though the regulatory terms are still being finalized, Reuters reports.

DeepSeek may not be the only company nearing approval. Reuters previously reported that other Chinese tech giants, including ByteDance, Alibaba, and Tencent, have been authorized to collectively purchase more than 400,000 H200 chips. Reuters adds that China's industry and commerce authorities have reportedly granted approval to all four companies, with conditions that are still being finalized.

One source cited by the report further noted that the specific terms are being determined by China’s state planner, the National Development and Reform Commission (NDRC).

For now, however, NVIDIA has not received any H200 orders from China, Bloomberg reported on the 29th. Citing CEO Jensen Huang in Taipei, the report noted that shipments remain on hold as Beijing continues to weigh whether to allow imports of the U.S. company's AI chips.

Notably, Reuters also highlights that any H200 purchases by DeepSeek could draw scrutiny from U.S. lawmakers: a senior U.S. legislator alleged in a letter to Commerce Secretary Howard Lutnick that NVIDIA had assisted DeepSeek in refining AI models that were later used by the Chinese military.

DeepSeek’s Upcoming V4 Launch

According to The Information, DeepSeek is set to unveil its next-generation AI model, V4, in mid-February, featuring enhanced coding capabilities.

According to Jiemian, DeepSeek's V4 large AI model marks a major leap forward in code generation, long-context comprehension, and training stability, with coding capabilities expected to rival and potentially surpass those of closed-source models such as Claude and the GPT series. By leveraging the new mHC architecture and the Engram conditional memory module, the model aims to cut training costs while easing GPU memory bottlenecks. Jiemian adds that this could help work around the HBM limitations of domestic AI chips and accelerate the growth of China's local computing ecosystem.

(Photo credit: NVIDIA)