Era of Machine Data: Analysis on Training and Inference Applications of AI Servers

Last Modified

2023-05-08

Update Frequency

Not

Format

Summary

The expectation that AI will bring immense benefits to the commercial market has prompted major suppliers to invest in AI training, which has generated demand for hardware. AI is currently in a phase of mass training, so AI server applications remain focused on training. GPUs and GPGPUs serve as the core of these servers, while ASICs and FPGAs, designed around specific algorithms, facilitate inference and will improve overall computing performance.

Table of Contents

1. Perpetual AI Training in the Era of Machine Data

(1) Existing AI Servers Primarily Focused on Training

(2) FPGA and ASIC Could Assist with Inference

2. Analysis on Architectures and Trends of AI Servers

(1) GPU Serves as the Core for AI Servers

(2) ASIC and FPGA to Accelerate Inference Applications

(3) Mass Data Prompts Storage Demand

(4) DPU Emerges to Strengthen Resource Allocation

(5) High Energy Consumption Amplifies Demand for Cooling

3. TRI’s View

(1) Fast Iterations of AI Models Drive Advancement of Server Modules

(2) AI Servers Currently Focused on Training with GPU Serving as a Key Factor

Total Pages: 12

Category: Computer System

Spotlight Report

-

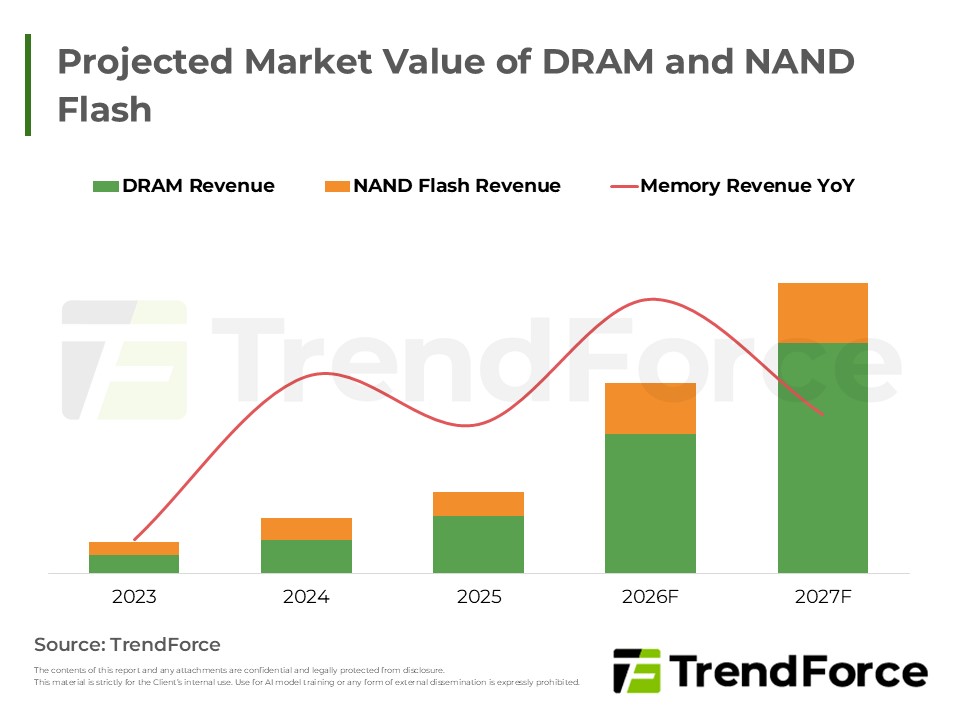

AI Reshapes Memory: Market Revenue to Peak by 2027

2026/01/20

Selected Topics

PDF

-

DRAM/NAND Flash 2026 Capex: AI-Driven Revisions, Capacity Limited

2025/11/07

Selected Topics

PDF

-

High Server DRAM Demand Drives Expansion by Major Suppliers

2025/11/24

Selected Topics

PDF

-

CSP CapEx Fuels 12.8% Server Growth: 2026 Forecast

2025/12/18

Selected Topics

PDF

-

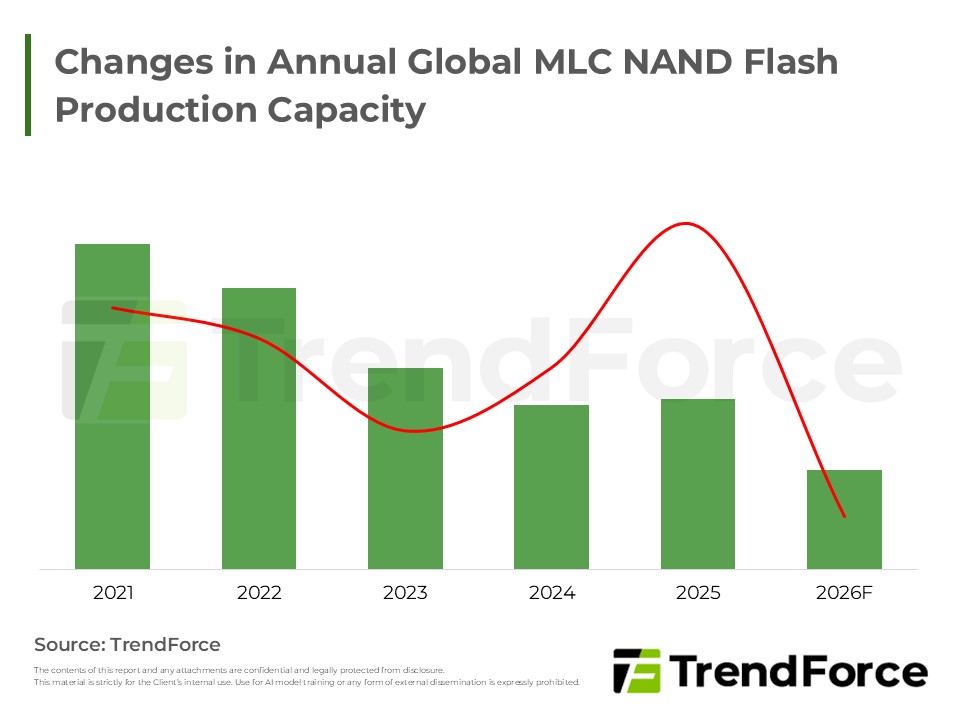

MLC Supply Cliff: Majors Exit & MXIC's Gain

2026/01/06

Selected Topics

PDF

-

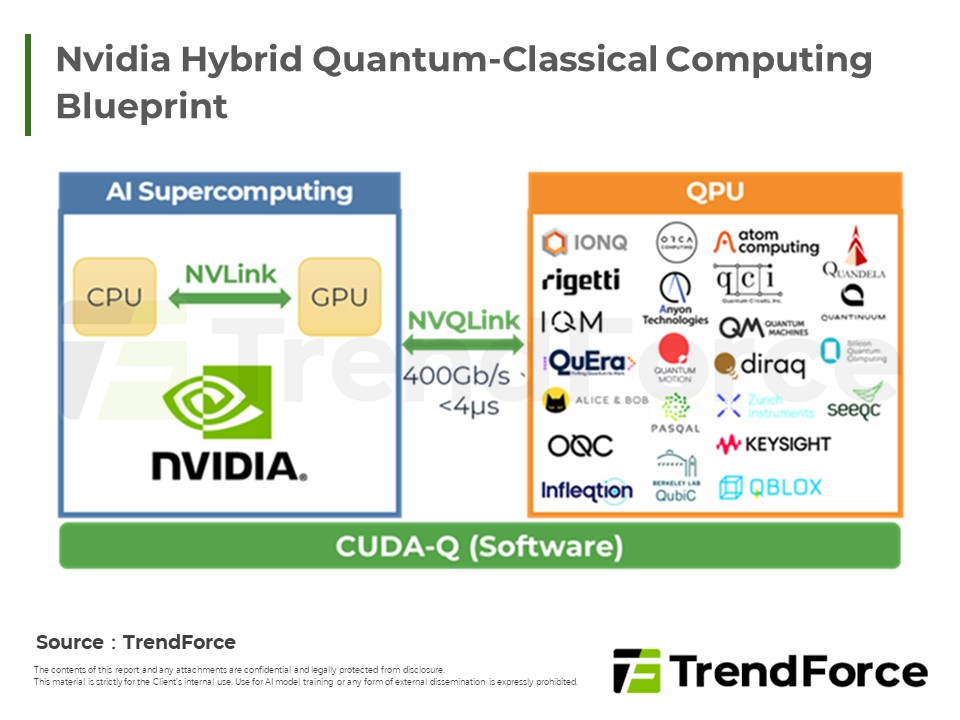

2026 Cloud AI Outlook: NA Hyperscalers Target GPU & ASICs

2025/11/05

Selected Topics

PDF


USD $2,000

