Development of AI Servers and GPGPU Will Flourish in Era of Parallel Computing

Last Modified

2023-06-08

Update Frequency

Not


Summary

As LLMs (Large Language Models), which excel in NLP (Natural Language Processing), and diffusion models, which excel in image processing, march toward mass commercialization, AI servers and the GPGPUs (General-Purpose Computing on Graphics Processing Units) that serve as their parallel-computing cores have become essential drivers of productivity gains through technological advancement.

AI servers offer a higher degree of customization than cloud servers. Taiwanese ODMs, including Foxconn Industrial Internet, Inventec, MiTAC, Wistron, Wiwynn, and Quanta, are once again playing essential roles in the AI server supply chain thanks to their customization capabilities, while server OEMs such as Dell, Gigabyte, HPE, Lenovo, and Supermicro are counting on AI server solutions as important growth drivers in the global server market in the post-pandemic era.

Table of Contents

1. AI Server Supply Chain

(1) ODM Direct for AI Servers

(2) OEM Mode for AI Servers

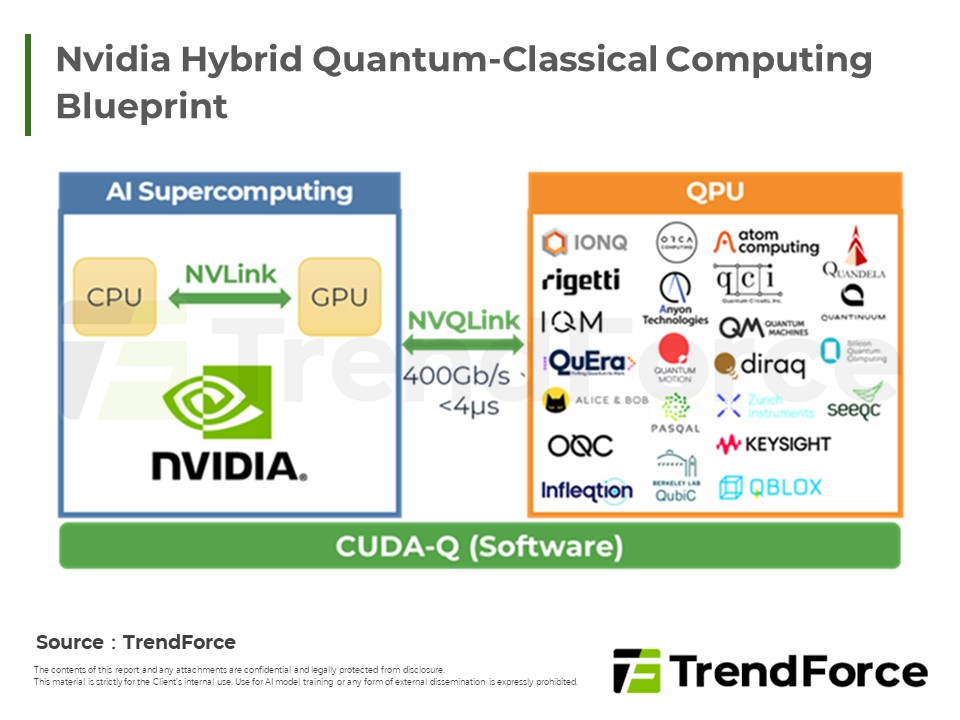

2. Three Major Suppliers of GPGPU for AI Servers: NVIDIA, AMD, Intel

(1) NVIDIA’s Data Center GPU

(2) AMD’s Instinct MI Accelerator

(3) Intel’s Data Center GPU Flex/Max

3. TRI’s View

(1) Production and sales of AI servers can be broadly divided into ODM direct and OEM mode, the major difference being that the former involves fewer production and sales segments

(2) Three Major GPGPU Suppliers NVIDIA, AMD, and Intel to Expedite Core Architecture Designs, Hardware Configurations, Packaging Technology, and High-Speed Interconnection Technology for Their Respective GPGPU Products

Total Pages: 11

Category: Semiconductors

Spotlight Report

-

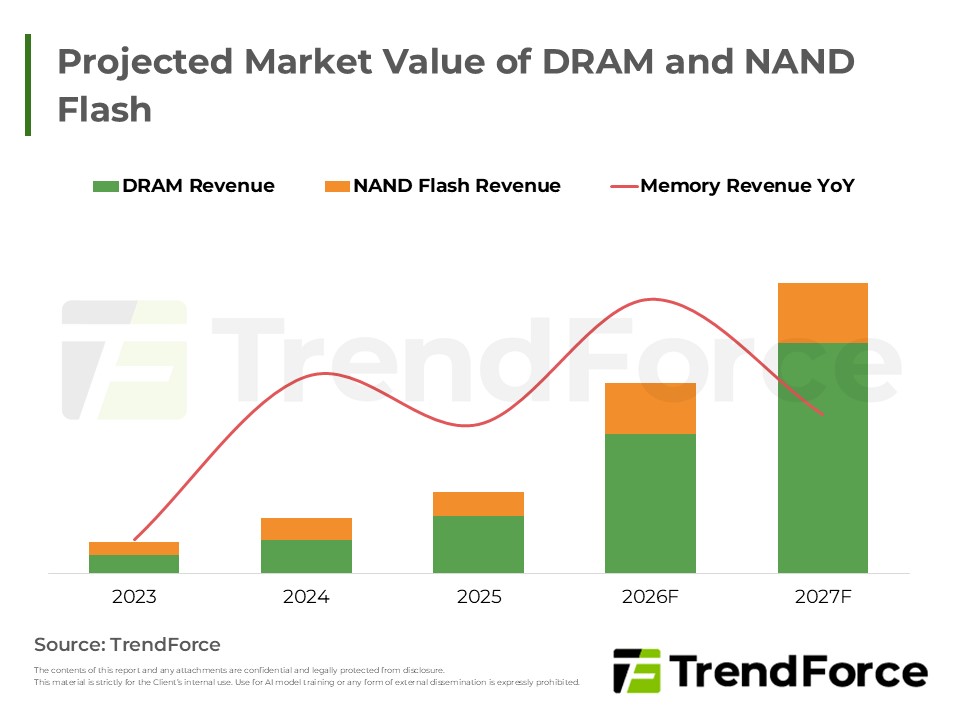

AI Reshapes Memory: Market Revenue to Peak by 2027

2026/01/20

Selected Topics

PDF

-

High Server DRAM Demand Drives Expansion by Major Suppliers

2025/11/24

Selected Topics

PDF

-

CSP CapEx Fuels 12.8% Server Growth: 2026 Forecast

2025/12/18

Selected Topics

PDF

-

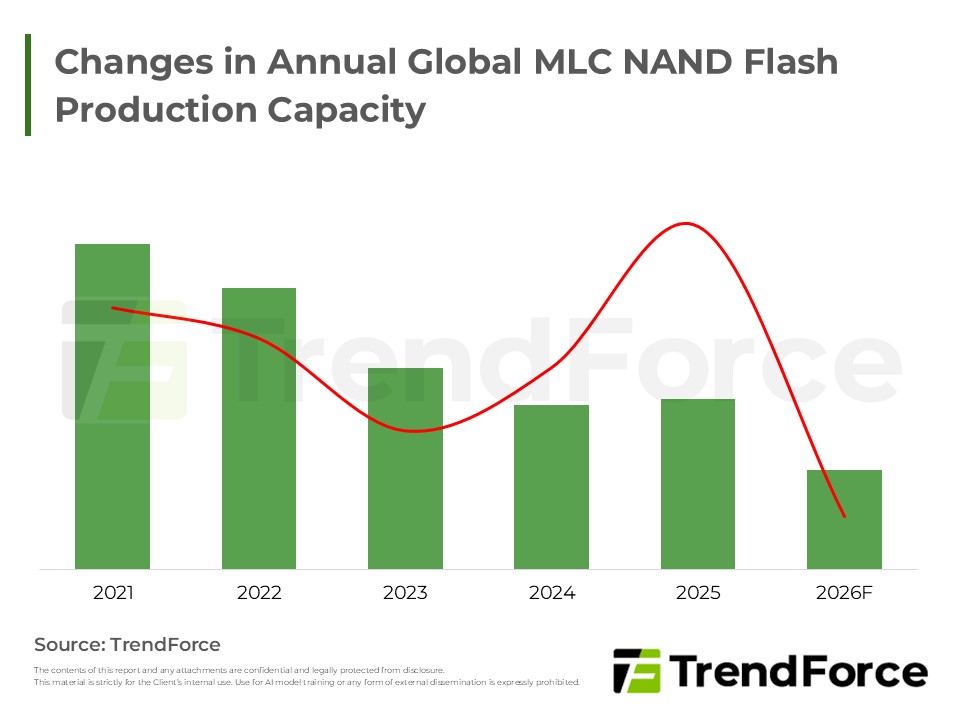

MLC Supply Cliff: Majors Exit & MXIC's Gain

2026/01/06

Selected Topics

PDF

-

Smartphone Production May Drop Over 15%: 2026 Memory Surge Ignites Cost Storm

2026/01/23

Selected Topics

PDF

-

DRAM/NAND Flash 2026 Capex: AI-Driven Revisions, Capacity Limited

2025/11/07

Selected Topics

PDF

